RSA Gradually Leaves The Stage After More Than 40 Years As A Leader — And It’s Its Friend (TLS)…

RSA Gradually Leaves The Stage After More Than 40 Years As A Leader — And It’s Its Friend (TLS) That Is Pushing It Off

Introduction

Like a piece of duck tape holding together a running machine, cryptography is fixing many of the problems that we have created within our digital world. But it also has a much greater role— to build a better world. Once we fix the running machine, we must build a better machine, and which doesn’t need duck tape anymore.

The world of cryptography seems to be getting near to a point that it is picking its favouriates. For symmetric (secret) key we have AES. In hashing methods it is SHA-256 and SHA-3 (Keccak) that are winning. And, for public key, the only method in town just now for new applications is elliptic curve. All that could change, of course, with the advent of quantum computers, but, for now, a new world is being built with these methods, and they are all working seamlessly to build a new world.

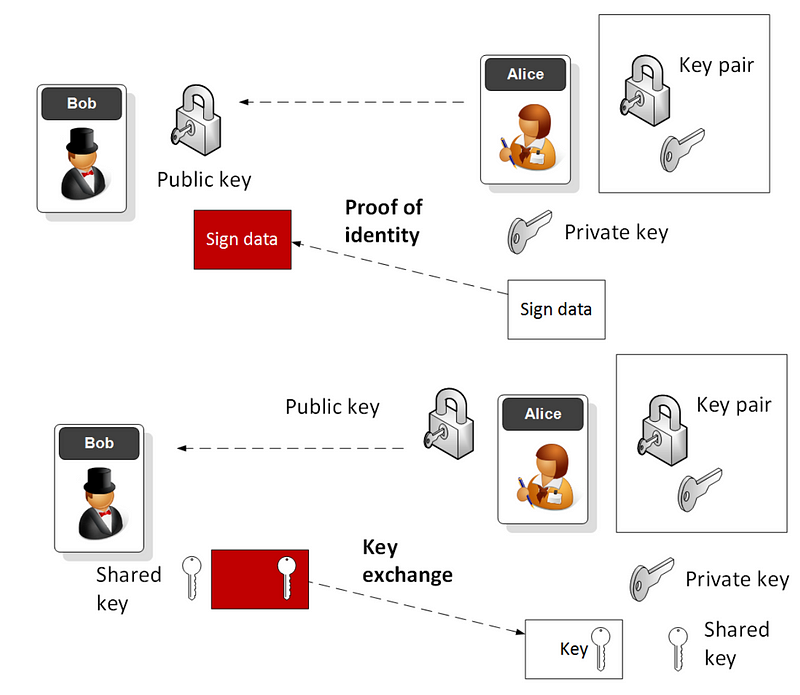

And so public key methods are left with two main functions: signing for transactions/proving identity — where the private key is used to encrypt a hashed value of an entity and the public key then verifies it — and in key exchange. RSA plays a part in both of these, and where we often see our digital certificates containing RSA keys. But, in key exchange, it is getting wiped out, as it is just so slow. It’s friend has been TLS, but the new standard for TLS (TLS 1.3) is pushing RSA off the stage for key exchange.

But one of the few remaining areas that RSA is still used is in the long legacy trail of SSL/TLS, and RSA must thank NetScape — all those years ago — for adopting it as a standard for SSL 1.0.

Goodbye — finally — to RSA?

The world has fallen in love with elliptic curves, and you’ll see it as the key exchange method of choice (ECDH — Elliptic Curve Diffie Hellman) and where it has pulled up the Diffie-Hellman method by its bootstraps, and made it fit for this modern era. For signing, too, it is ECDSA (Elliptic Curve Digital Signature Algorithm) that rules the roost for all new applications.

Elliptic Curve is at the core of the Tor network, and within Blockchains, and it is protecting your smart card. So why is it winning? Well the core protection within RSA is the factorization of a modulus (N). This is created from the multiplication of two prime numbers. If this can be factorized, RSA is broken.

But RSA still has a friend: the TLS standard used in HTTPs, and where it is one of the methods which is used for key exchange and for the signing process. Most of the certificates that are purchased still use RSA keys.

Unfortunately, RSA has had to keep up with crypto inflation, and where the cracking hardware improves by the day. So with 512-bit RSA keys being completely untrusted, and with 1,024 bits keys are going the same way , we now end up with massive 2,048 bit key. This has a significant affect on the CPU, as it must deal with massive numbers. This is 2 to the power of 2048:

32,317,006,071,311,007,300,714,876,688,669,951,960,444,102,669,715,484,032,130,345,427,524,655,138,867,890,893,197,201,411,522,9 13,463,688,717,960,921,898,019,494,119,559,150,490,921,095,088,152,386,448,283,120,630,877,367,300,996,091,750,197,750,389,652,1 06,796,057,638,384,067,568,276,792,218,642,619,756,161,838,094,338,476,170,470,581,645,852,036,305,042,887,575,891,541,065,808,6 07,552,399,123,930,385,521,914,333,389,668,342,420,684,974,786,564,569,494,856,176,035,326,322,058,077,805,659,331,026,192,708,4 60,314,150,258,592,864,177,116,725,943,603,718,461,857,357,598,351,152,301,645,904,403,697,613,233,287,231,227,125,684,710,820,2 09,725,157,101,726,931,323,469,678,542,580,656,697,935,045,997,268,352,998,638,215,525,166,389,437,335,543,602,135,433,229,604,6 45,318,478,604,952,148,193,555,853,611,059,596,230,656

And so RSA is still hanging on within digital certificates, and in signing for identity. This is because the overhead in the signing process is not massive, as we just encrypt a small amount of data (the hashed value). But in key exchange, the method is not really fit for our modern world, filled with IoT devices.

For years, in our HTTPs connection we have used key exchanges which have involved either a Diffie-Hellman approach (such as with ECDH), or where one side generates a secret key, and then encrypts with the public key of the other side. This has been the core of SSL/TLS. But not with TLS 1.3. RSA has been kicked-out of the key exchange process, and will be pushed aside as a way to generate the key used in the HTTPs tunnel.

Why? Because it’s just too slow, and it doesn’t support forward secrecy (and where a long term breach of the core keys cracks all the keys derived from them). And so RSA leaves the stage a little bit more. The only method for key exchange which will be supported in TLS is ephemeral Diffie-Hellman, and which will protect keys, even with the cracking of a long-term master key.

A Bit of History

In August 1977, The Stranglers were in the charts with “Something Better Change” and something really was changing, and it something that would change the world forever. This was the month that Martin Gardner, in his Scientific American column, posted a challenge of a method that has stood the test of time: RSA.

It related to the work of R(ivest), A(dleman) and S(hamir) and was a puzzle on their discovery of a method which allowed two keys to be created, where one could encrypt, and the other to decrypt. Their work had been based on a proposal from Whitfield Diffie and Martin Hellman on trapdoor functions that could be used to create the key pair.

In order to explain the RSA concept, Martin’s provided a background the Diffie-Hellman method for which he outlined:

Then in 1975 a new kind of cipher was proposed that radically altered the situation by supplying a new definition of “unbreakable.” a definition that comes from the branch of computer science known as complexity theory. These new ciphers are not absolutely unbreakable in the sense of the one-time pad. but in practice they are unbreakable in a much stronger sense than any cipher previously designed for widespread use. In principle these new ciphers can be broken. but only by computer programs that run for millions of years!

Overall the Diffie-Hellman method has had a good run, but it has struggled in recent years to keep up with the processing power for computers, and the millions of years of running is not quite the case in the modern area, and where the original ciphers could now easily be broken with the simplest of computers within minutes.

With the RSA method, Martin Gardner outlined:

Their work supported by grants from the NSF and the Office of Naval Research. appears in On Digital Signatures and Public-Key Cryptosystems (Technical Memo 82. April. 1977) issued by the Laboratory for Computer Science Massachusetts Institute of Technology 545 Technology Square. Cambridge Mass. 02139.

The memorandum is free to anyone who writes Rivest at the above address enclosing a self-addressed. 9-by-12-inch clasp.

On receipt the requesters eventually (it took over four months in many cases) received a precious piece of history:

It seems unbelievable these days, but the original methods were based on two 63-digital prime numbers that would be multiplied to create a 126-digit value:

Contrast this with the difficulty of finding the two prime factors of a 125- or 126-digit number obtained by multiplying two 63-digit primes. If the best algorithm known and the fastest of today’s computers were used, Rivest estimates that the running time required would be about 40 quadrillion years’

A 256-bit number, at its maximum, generates 78-digits [here]:

115,792,089,237,316,195,423,570,985,008,687,907,853,269,984,665, 640,564,039,457,584,007,913,129,639,936

The 40 quadrillion years has not quite happened, and where 512-bit keys are easily broken in Cloud. If you are interested, here is a 512-bit integer value and which has 148 digits, such as [example]:

13,407,807,929,942,597,099,574,024,998,205,846,127,479,365,820,5 92,393,377,723,561,443,721,764,030,073,546,976,801,874,298,166,9 03,427,690,031,858,186,486,050,853,753,882,811,946,569,946,433,6 49,006,084,096

The search for prime numbers, too, has been progressive since 1977, and by 2014, the world discovered a 17,425,170-digit prime number. The finding of prime numbers make the finding of them in the RSA method must easier.

So the RSA method has been under attack for years, from both discovering prime numbers and also in factorizing. Along with this computing power has increased massively. If think that 40 years that have passed, and take a quick assumption that computing power doubles every year then we get:

1977 4 Quadrillion Years (4,000,000,000,000,000)

1978 2 Quadrillion Year

1979 1 Quadrillion Year

...

2015 3,637 years

and if we get an NVIDIA card with 4,000 processors, we take it to less than a year, and we get of few of them today into a cluster, and we crack it within one day! The FREAK vulnerability was actually caused by the limiting of RSA keys, due to US Export controls, to 512-bits [view].

The factorising of prime numbers too has generated methods which can quickly find the prime number factors [here].

The Tension of Crypto and Academic Freedom

Once Martin had published the article, the requests for the article came rushing in, especially as the paper had not yet appeared in the Communication of the ACM. Initially there were 4,000 requests for the paper (which rose to 7,000), and it took until December 1977 for them to be posted.

Why did it take so long to get the paper published and also to send them out?

Well the RSA method caused significant problems within the US defence agencies. This was highlighted in a letter sent from J.A.Meyer to the IEEE Information Theory Group on a viewpoint that cryptography could be violating the 1954 Munitions Control Act, the Arms Export Control Act, and the International Traffic in Arms Regulations (ITAR), and could thus be viewed equivalent to nuclear weapons. In even went on to say that:

Atomic weapons and cryptography are also covered by special secrecy laws

The main focus of the letter was that any work related to cryptography would have to be cleared by the NSA before publication. In fact, the letter itself had been written by Joseph A Meyer, an employee of the NSA.

Joseph had already been embroiled in controversy with a proposal to fit a tracking device to the 20 million US citizens who had been associated with crime. The tag would then be used to monitor the location of the “subscriber”, and to detect when they broke a curfew or committed a crime. In this modern era of GPS tracking of everyone’s phones, Joseph’s dream has actually become a reality, but now everyone is monitored.

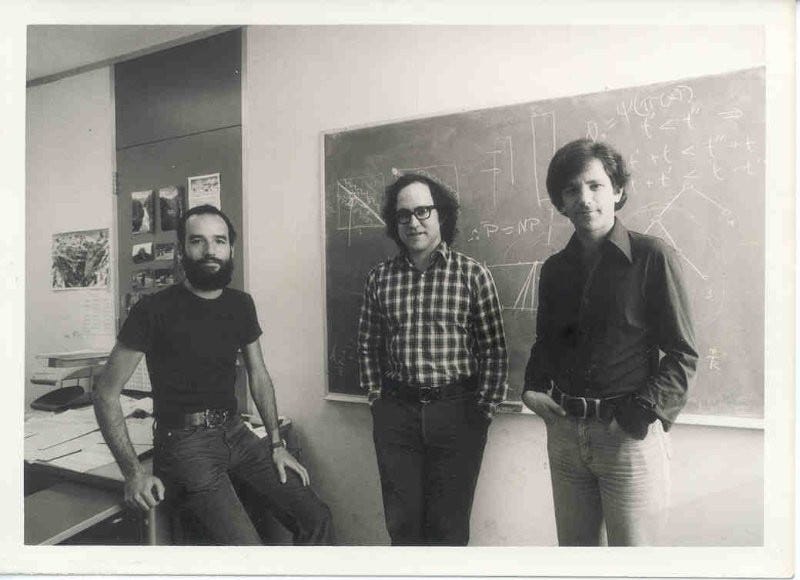

The RSA team thus had a major dilemma, as many of the requests for the paper come from outside the US. Martin Hellman, who was a co-author of the Diffie-Hellman method, had already had problems with ITAR, and even decided to present thepaper himself in 1977 at Cornell University rather than the practice of letting his PhD students present the work.

His thinking was that the court case would be lengthy, and that it would damage his PhD student’s studies (Ralph Merkle and Steve Pohlig), and so he stood up for academic freedoms. Initially the students wanted to present their work, but their families did not think it a good idea. Eventually though, Ralph and Steve stood beside Hellman on the stage to present the paper, but did not utter a word.

With this stance the cryptographers held ground, and hoped that a stated exemption on published work within ITAR would see them through. The worry, though, did delay the paper being published, and for the posting of the article. In reply to Meyer’s letter, the IEEE stood its ground on their publications being free of export licence controls, with the burden of permissions placed on the authors:

and then additional response from the IEEE say them put in place safeguards for the publishing of material which did not have the correct level of permission:

The scope of the impact of RSA was perhaps not quite known at the time with Len Adleman stating:

I thought this would be the least important paper my name would ever appear on

In fact, Adleman has said that he did not want his name on the paper, as he had done little work on it, but he did insist that his name went last. Often papers, too, have an alphabet order, and if so the method could have been known as the ARS method … not the kind of thing that you would want to say to audiences on a regular basis.

As another contribution, Ron Rivest came up with the concept of Bob and Alice, and for Bruce Schneier to then introduce the concept of Eve (the intruder), Trend (the trusted agent) and Mallory (the active attacker). If you’re interested, here’s Bob, Alice, Eve and Trent all working together:

Conclusions

For RSA, the exit from key exchange is another nail in its coffin, and is one more reason that it will eventually leave the stage. With TLS 1.0 still being supported on some sites (and even SSL 2.0), it might take a while to leave, though.