“FIXME: Not A Good Idea To Do This!” … Hunting For Bugs in Comments

“FIXME: Not A Good Idea To Do This!” … Hunting For Bugs in Comments

If you read some source code, and you came across comments such as this, you would probably worry that the code wasn’t that professional:

/* FIXME: setting this via a completely different prototype seems like a crap idea */

/* NB: if compression is in operation the first packet

* may not be of even length so the padding bug check

* cannot be performed. This bug workaround has been

* around since SSLeay so hopefully it is either fixed

* now or no buggy implementation supports compression */

/* actually a client application bug */

al=SSL_AD_ILLEGAL_PARAMETER;

SSLerr(SSL_F_SSL3_GET_SERVER_HELLO,SSL_R_ATTEMPT_TO_REUSE_SESSION_IN_DIFFERENT_CONTEXT);

goto f_err;

}

s->hit=1;

}

else /* a miss or crap from the other end */

But this from a program that plays a core part of the security of the Internet: OpenSSL. Its C++ code takes a bit of reading to understand, and it has been held together by part-time developers with little in the way of funding.

So this week a researcher at Qualys spotted a new bug from reading the comments on a code review of OpenSSH:

delay bailout for invalid authenticating user until after the packet containing the request has been fully parsed. Reported by Dariusz Tytko and Michal Sajdak; ok deraadt

and where the code may inform an intruder to test if a user exists or not. The researcher then identified a bug in this code:

87 static int

88 userauth_pubkey(struct ssh *ssh)

89 {

...

101 if (!authctxt->valid) {

102 debug2("%s: disabled because of invalid user",__func__);

103 return 0;

104 }

105 if ((r = sshpkt_get_u8(ssh, &have_sig)) != 0 ||

106 (r = sshpkt_get_cstring(ssh, &pkalg, NULL)) != 0 ||

107 (r = sshpkt_get_string(ssh, &pkblob, &blen)) != 0)

108 fatal("%s: parse request failed: %s", __func__, ssh_err(r));

The focus is on generating a malformed packet and then there will be two possible returns:

- If the user does not exist, then the userauth_pubkey() function returns a SSH2_MSG_USERAUTH_FAILURE message.

- If the user exists then sshpkt_get_u8() fails and the fatal method is called, and the program shuts the connection.

In this way an attacker can scan for valid usernames on the system. OpenSSH is integrated into many products and systems, and on the server it includes the main SSH service (sshd) and for secure FTP (sftp-server). It also integrates other tools such as ssh, scp and sftp.

The Greatest Security Bug Ever!

Another open source program which has caused many problems is OpenSSL. It has never actually progress to Version 1.2, but has been responsibly for some pretty significant bugs. One of the most significant is these was Heartbleed.

OpenSSL was started with Eric A Young and Tim Hudson, in Dec 1998, who created the first version of OpenSSL (SSLeay — SSL Eric AYoung), which then became Version 0.9.1. Eric finished his research and left to go off and do other things, and was involved with Cryptsoft (www.cryptsoft.com) joining RSA Security, where he is currently a Distinguished Engineer. After Eric left, it was then left to Steve (from the US) and Stephen Henson (from the UK) to continue its development through the OpenSSL Software Foundation (OSF).

The code continued to grow with TLS support added to 1.0.1a on 14 March 2012. Unfortunately, a bug appeared on 1 Jan 2012 which implemented the Heartbeat protocol (RFC 6520), and which ended-up resulting in Heartbleed (Figure 1). The bug was actually introduced by the same person who wrote the RFC — Robin Seggleman, a German developer.

For this, Robin implemented Heartbeed which sent a Heartbleed request which specified a certain payload and then asked the other side to echo it back again — which is probably the most pointless network protocol ever created. the implementation was sloppy, and there were no checks on the actual size of the payload sent. What happened was that OpenSSL tried to fill the memory with data that wasn’t there, and then read it back. This lead to a buffer under run, which meant that OpenSSL read from an area of memory that had data in it from other programs (and which hadn’t been cleared — as most developers don’t clear the memory once programs are finished with it).

So the payload that went back contained things like usernames and passwords, and, worst of all, private keys for the server. This threat caused millions of digital certificate updates, and caused panic in many companies, as intruders could pretend to be them, and sign things that would pretend to be them.

You basically start to worry about the code when you see comments like this:

/*

* XXXX just disable all digests for now, because it sucks.

* we need a better way to decide this - i.e. I may not

* want digests on slow cards like hifn on fast machines,

* but might want them on slow or loaded machines, etc.

* will also want them when using crypto cards that don't

* suck moose gonads - would be nice to be able to decide something

* as reasonable default without having hackery that's card dependent.

* of course, the default should probably be just do everything,

* with perhaps a sysctl to turn algoritms off (or have them off

* by default) on cards that generally suck like the hifn.

*/

Buffer underrun (or memory over-read)

The C/C++ programming language is often the standard method for creating cryptography code, as it is fast and it allowed for the direct manipulation of bits within the memory. This power comes at a price, as the C programming languages tend to let the programmer do things that might be dangerous. A common problem is a buffer overrun, where the user input overflows the allocated memory, and run into another area which might also contain program variables. In the program acts and can compromise the program as it runs. In the case of HeartBleed, it was a buffer underflow which caused the problem, where a data array was meant to fill an area of memory, but the data to fill it with was non-existent.

The code that caused the Heartbleed problem is shown in Figure 4, where the variable payload is read from a Heartbeat request data packet, and then allocates an area of memory of this size, and puts the payload in there. If the payload size is the same as the payload value, it will exactly fill the allocated area. If not, it will overrun or underrun. For Heartbleed, the payload was zero, and the payload size was 16KB, which meant that OpenSSL tried to allocate 64K of memory, and put a zero length data payload into this memory. OpenSSL then lifts the filled memory from and sends it back. If no data was loaded, the data sent back will be whatever was in the running memory, such as usernames, passwords and private keys.

Network Traces

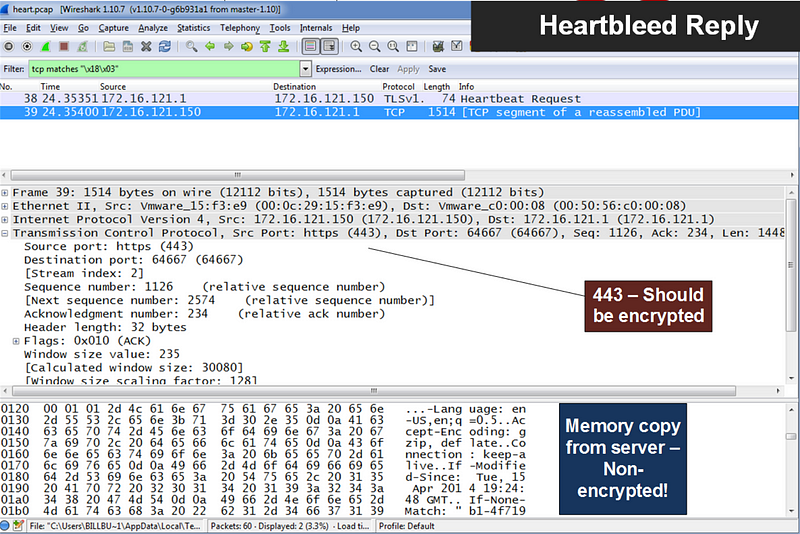

Within hours of the announcement, there was Python code which any script kiddie to point towards a Web site, and pick off the running memory on the system. The request was made up :

18 (Heartbeat Request)

03 02 (TLS 1.1)

00 00 (Length)

01 (Heartbeart Request)

40 00 (Payload length 16,384)

As we can see in Figure 5, there is no actual payload (even though the request says it is 16K long), and where Figure 6 shows a sample response. The return was then the contents of 64kB of memory, and which could contain passwords, user details and encryption keys.

The real victims were Yahoo, who’s site was used to illustrate the threat, and Agnes Adu Boateng, whose email address appeared in the dump from Yahoo’s email system, and which included her email address and blanked-out password (Figure 7). With a little bit of a Google search, you’ll find that Agnes is actually on the Members of Good Standing for the Chartered Institute of Taxation (Ghana) [here]. So for a few weeks, Agnes was a star of the Internet, and gave up, without her consent, her email address (and luckily not her password too). There’s no data on how many em users Yahoo lost, but there was a general panic at the time.

Whenever a security alert happens, the media roll-out the standard “security expert”, who then babbles through a whole range of scary things that often scare more than give useful advice. The standard advice for most things is, that if users are worried that someone has your details, go ahead and change your passwords, and you’ll scare any bad people away.

It happened with Heartbleed when a whole range of people (and companies) advised that users should change their passwords, which to the waiting script kiddies running their Python scripts was like shooting-fish-in-a-barrel. Whenever a password is changed, the old one will sit in the running memory, and the new one will appear … wow … two passwords for the price of one .. thank you security expert! Obviously, the advice should have been that users should contact their Web provider and ask if they had patched yet, and login and change their password once they got the all-clear.

s2n — Signal-to-noise

To overcome the problems caused by OpenSSL, Amazon created a new stack: s2n, with a core focus on improving TLS (Transport Layer Security) with a lighter weight approach. This follows Google’s release of BoringSSL and OpenBSD’s LibreSSL. Each have defined smaller and more stripped down versions that implement the basic functionality of SSL/TLS. Overall s2n uses only 6,000 lines of code, but, of course, this is likely to increase with new versions, as it is only a basic implementation.

Git hub version: here.

s2n is open source and hosted in GitHub allowing others to view and review the code, along with it being difficult to actually delete a project which is hosted there. Along with this, GitHub allows for a forking of the project, to support new features which the core version does not want to support.

What is interesting too, is that Amazon have generally taken security seriously, and will hopefully respond well to bugs found by the community, and not to take a kneejerk reaction (such as by Starbucks on someone finding a bug in their payment systems). Generally they have worked well with researchers and academics on new bugs.

Many problems, though, are still caused by SSLv3, and the new stack still supports it (but is disabled by default, as is the case for BoringSSL). LibreSSL has not supported SSLv3.

Problems, too, have been discovered in the random generator for the key generation (one for public and one for the private key), and s2n uses two separate random number generators, which many would struggle to see the advanced of this, but perhaps time will tell.

Demos

Demo of session details: https://www.youtube.com/watch?v=AXe4G3ttpTw

IDS Detection: https://www.youtube.com/watch?v=wPVtkYZf6M4

Network packet analysis: https://www.youtube.com/watch?v=IXhvLt3EX1k