A Little Cyber Puzzle …

We were interviewing a while back for a research post and, as a starting point for a deeper question, I asked the candidate how many bytes are typically used to store an integer on a computer. “Is it one?” “Nope,” I said. “Is it none?” “Definitely not,” I said. “Well, I don’t know.”

To me, the core understanding of cybersecurity should start at the lowest level and work up. If you don’t understand how data is actually stored and processed, in bits and bytes, and in little-endian and big-endian byte order, then it’s like a bridge engineer not knowing how the bolts holding a bridge together actually work, or a medical doctor not knowing how blood vessels work.

Our cybersecurity syllabus should thus ensure a strong foundation in how computers actually work: how we convert bits into different representations and formats, and then operate on them. A core skill for all must be a strong understanding of machine-level activity and of bitwise operations. Yet, for some reason, core skills in bit twiddling, and in understanding how data is actually processed and stored, are a little lacking in places.

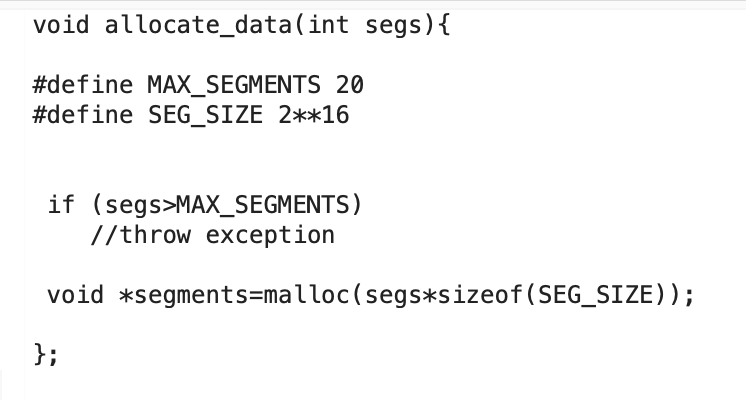

So, here is a little problem for you. In this code, we allocate memory in blocks of 64KB, and restrict the program to twenty 64KB blocks. But an intruder can easily exploit this code with a simple operation.

Can you work out the vulnerability?