Meet “Facebook Living Room” and “Facebook Town Square”

Facebook and Google have grown without any real constraints, and their core business model is to provide carrots (free on-line services) while writing T&Cs which allow them to do basically anything they want with your data. They have tried lots of different business models, but they keep coming back to the only one that really works … pushing customised advertisements to you.

And so there are a whole lot of marketing and advertising agencies sitting behind most of the things you do on-line, quickly determining whether they want you to be their next customer, and finding a hook. At one extreme of the spectrum this can be used to push targeted political messages based on people’s interests, and at the other end, it is good old targeted advertising. And the cloud service providers now want to know whether the advert you clicked on actually led to a sale (as the commission is often higher).

Google and Facebook thus both broke the isolated model of the Web a long time ago, so that your activity in one browser can trigger something in another browser. For this, their perfect model is one-to-one marketing, and then knowing that you have followed up on an advertisement. But there is a general kick-back against this approach, from both the EU (with GDPR) and from citizens. Consent, privacy and control of the data that companies hold on us must become a core part of our on-line work.

Meeting in the town square and your living room

So how will Facebook cope with respecting privacy and consent at one end of the spectrum, and all-out advertising and income generation at the other? Well, Mark Zuckerberg wants to keep it simple … he will split Facebook into two: a privacy-preserving Facebook (your living room, a private space) and an all-out Facebook (the town square, a public forum). With Facebook facing fines from many directions, it needs to take action soon, and it is believed that the split sites are being tested in Bolivia, Cambodia, Serbia, Slovakia and Sri Lanka. And so, the “living room” is where you meet your family, friends and those you trust, and the “town square” is where you meet the world (and where advertisers will target you).

And for most, we say … “that’s how we have been living our lives on-line”.

But for law enforcement, this is not a good space, as it is the private things that they often want access to. And so many governments of the world are increasingly likely to put pressure on Facebook to open up its private spaces to law enforcement. And here we have a Catch-22 situation, as Facebook needs to build up trust in its encrypted spaces, but is also likely to be forced into providing backdoors. In the UK, for example, there are calls for the CEOs of social media companies to be held responsible for the damage that their platforms can cause to our society.

But what is the point of having a privacy-focused entity?

For Facebook, there must be some business advantage in WhatsApp, otherwise it will be running an infrastructure which does not bring in income. One model is to merge identities across the platforms, for both the “living room” and the “town square”. Facebook could then observe and hear the interactions in the town square, but in your living room it could only see who you were speaking to, and not actually hear your conversations.

And so it has happened … Facebook now wants to rewrite the code around WhatsApp and integrate it with Instagram and Facebook Messenger. This article is based on speculation, and there are currently no details of how Facebook will integrate the packages. What is known is that Facebook bought WhatsApp for its customer base, but is stuck with end-to-end encryption, where it cannot mine user interactions.

It ends up with a Catch-22 situation. Users like the lack of adverts, but Facebook perhaps needs them in order to gain value from its investment, or at least wants to share the metadata on its customers across the companies it owns. At this point, there are no details of where in the tunnel Facebook might punch in (or whether it will at all).

The spin is that all three apps will come together onto a single messaging system (which makes sense), but this may give Facebook an opportunity to rewrite the code and break into the tunnel. At present, end-to-end encryption is disabled by default on Facebook Messenger (it has to be enabled for each chat), and there is no such feature on Instagram. The tension in not using proper end-to-end encryption is that Facebook would obviously like to know what people are talking about, and thus their interests, and it would also let government agencies tap into communications. And so it could become a battle around user privacy.

The founders of both WhatsApp and Instagram have left Facebook, with Brian Acton, a co-founder of WhatsApp, being particularly averse to Facebook’s business model.

How could it be done?

A possible implementation is for WhatsApp to become less secure and the other two to improve their security, with metadata being shared across the applications. In this way, Facebook would join all their users together and share the tunnelled encryption methods (and thus improve the security of Instagram and Facebook Messenger), while supporting possible cross-sharing of users’ data. This would allow Facebook to paint a better picture of who people actually communicate with, and cluster them into areas of interest.

So let’s say that Bob and Victor are Instagram users, Carol is a WhatsApp user and Alice is a Facebook user. Facebook could merge their accounts into a common shared infrastructure, where each of them would have a single ID. Facebook would then be able to map users better across each of the platforms, but not be able to mine their messages:

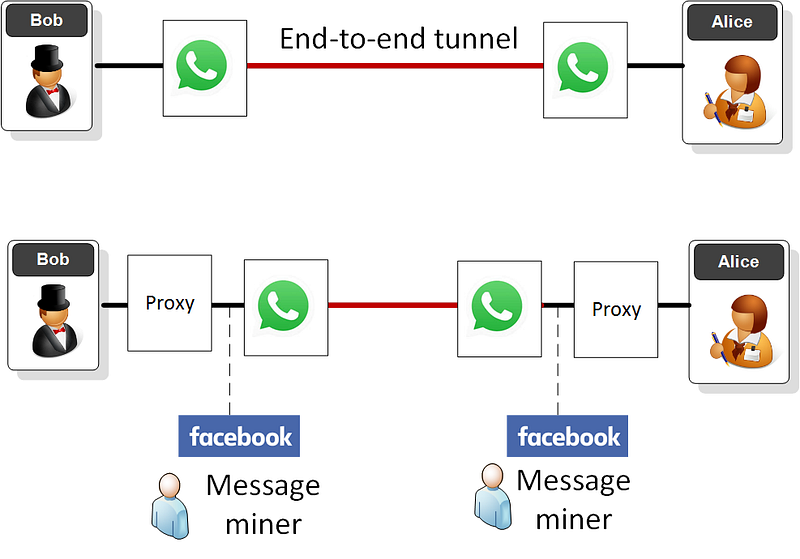
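A minimal sketch of that idea follows, with every name in it hypothetical: the `Account` and `Envelope` records, the phone number used as the cross-platform linking key, and the `uid-N` ID format are all assumptions for illustration. The merged directory gives each account one shared ID, and the server can then count who talks to whom from envelope metadata alone, while the message bodies stay as opaque ciphertext.

```python
import os
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Account:
    platform: str  # "instagram", "whatsapp" or "facebook"
    handle: str    # platform-specific user name
    phone: str     # hypothetical key used to link accounts across platforms


@dataclass(frozen=True)
class Envelope:
    sender: Account
    recipient: Account
    ciphertext: bytes  # end-to-end encrypted: the server can store it but not read it


def merge_identities(accounts):
    """Map every account onto one shared ID; accounts with the same phone share an ID."""
    ids_by_phone = {}
    directory = {}
    for account in accounts:
        if account.phone not in ids_by_phone:
            ids_by_phone[account.phone] = f"uid-{len(ids_by_phone) + 1}"
        directory[account] = ids_by_phone[account.phone]
    return directory


def contact_graph(envelopes, directory):
    """Count conversations between shared IDs using only sender/recipient metadata."""
    edges = Counter()
    for envelope in envelopes:
        pair = tuple(sorted((directory[envelope.sender], directory[envelope.recipient])))
        edges[pair] += 1
    return edges


bob = Account("instagram", "bob", "+00 0001")
victor = Account("instagram", "victor", "+00 0002")
carol = Account("whatsapp", "carol", "+00 0003")
alice = Account("facebook", "alice", "+00 0004")

directory = merge_identities([bob, victor, carol, alice])
# Random bytes stand in for real ciphertext: the graph never looks inside them.
traffic = [
    Envelope(bob, victor, os.urandom(16)),
    Envelope(victor, bob, os.urandom(16)),
    Envelope(carol, alice, os.urandom(16)),
]
graph = contact_graph(traffic, directory)
print(directory[carol], directory[alice])  # uid-3 uid-4
print(graph[("uid-1", "uid-2")])           # 2: Bob and Victor talk most
```

Nothing in `contact_graph` touches `ciphertext`, which is the point: the “who” can be mined even when the “what” cannot.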

Currently, WhatsApp creates a pure end-to-end tunnel, where no-one can sit in between the communications, and where the encryption key is negotiated directly between Bob and Alice (using ECDH, Elliptic Curve Diffie-Hellman). A less pure system creates a proxy before the tunnel, and allows the application to mine the messages before and after the tunnel:
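The direct key negotiation between Bob and Alice can be sketched with X25519 (ECDH on Curve25519, following the Montgomery ladder in RFC 7748), written in pure Python so it has no dependencies. This is a single ECDH exchange only: WhatsApp’s real protocol (based on the Signal Protocol) layers much more on top, and the plain SHA-256 hash below is an assumed stand-in for a proper key-derivation function. Python big-integer arithmetic is not constant-time, so treat this as a teaching sketch, not production code.

```python
import hashlib
import os

P = 2**255 - 19                       # prime field of Curve25519
A24 = 121665                          # (486662 - 2) / 4, ladder constant from RFC 7748
BASE_U = (9).to_bytes(32, "little")   # standard base point, u = 9


def x25519(k, u):
    """Scalar multiplication on Curve25519 (the RFC 7748 Montgomery ladder)."""
    kb = bytearray(k)
    kb[0] &= 248                      # clamp: clear the low three bits
    kb[31] &= 127                     # clear the top bit
    kb[31] |= 64                      # set bit 254
    scalar = int.from_bytes(kb, "little")
    x1 = (int.from_bytes(u, "little") & ((1 << 255) - 1)) % P
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        swap ^= bit
        if swap:                      # conditional swap (branching, so not constant-time)
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def keypair():
    """Random 32-byte private key and its public key (private key times base point)."""
    private = os.urandom(32)
    return private, x25519(private, BASE_U)


# Bob and Alice each generate a key pair and exchange only the public halves.
bob_private, bob_public = keypair()
alice_private, alice_public = keypair()

# Each side combines its own private key with the other's public key.
bob_shared = x25519(bob_private, alice_public)
alice_shared = x25519(alice_private, bob_public)

# Hash the shared secret into a 32-byte session key (stand-in for a real KDF).
session_key = hashlib.sha256(bob_shared).digest()
print(bob_shared == alice_shared)     # True: the same secret, never sent over the wire
```

Only the public keys cross the network, so a server that merely relays them cannot compute `session_key`; that is what makes the tunnel “pure”.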

The proxy then allows messages to be mined (and law enforcement to tap into the communications). The best solution for Facebook, though an unlikely scenario, would be for it to start mining its users’ communications for keywords, and then feed advertisements to them either on the platform or through its other social media channels:
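To make the proxy idea concrete, here is a hypothetical sketch (the `MiningProxy` class, the keyword list and the placeholder `fake_encrypt` are all invented for illustration) of a hook that reads each message in plaintext, records advertiser keywords against the user, and only then hands the message to the tunnel. The tunnel itself is untouched, which is why this kind of mining would not show up as broken encryption.

```python
import re
from collections import Counter, defaultdict

# Hypothetical advertiser keyword list; a real system would use far richer models.
AD_KEYWORDS = {"holiday", "football", "mortgage", "trainers"}


class MiningProxy:
    """Hypothetical hook that sees each message in plaintext before it enters the tunnel."""

    def __init__(self, encrypt):
        self.encrypt = encrypt                 # the tunnel's own encryption, left untouched
        self.interests = defaultdict(Counter)  # user -> keyword counts

    def send(self, user, plaintext):
        # Tokenise the plaintext and record any advertiser keywords for this user.
        for word in re.findall(r"[a-z']+", plaintext.lower()):
            if word in AD_KEYWORDS:
                self.interests[user][word] += 1
        # Only after mining is the message encrypted and passed to the tunnel.
        return self.encrypt(plaintext.encode("utf-8"))

    def top_interests(self, user, n=3):
        """Keywords that could be used to target this user with adverts."""
        return [word for word, _ in self.interests[user].most_common(n)]


def fake_encrypt(data):
    """Placeholder for the real end-to-end encryption; it is NOT a cipher."""
    return bytes(len(data))


proxy = MiningProxy(fake_encrypt)
proxy.send("bob", "Fancy some football before our holiday? Football is on at 3.")
print(proxy.top_interests("bob"))   # ['football', 'holiday']
```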

While this would be good for Facebook’s revenue, it is unlikely to be implemented, as it would decrease user trust in Facebook and possibly see it lose many users to Telegram. There is no way that Facebook could even consider the following:

In this way, Facebook either keeps a copy of the key that is created for the tunnel, and is able to mine the conversation, or it creates a “Facebook-in-the-middle” and breaks the tunnel. Both would also allow law enforcement to snoop on the communication. This method would be seen as a core breach of privacy laws and would have little chance of being implemented.
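The “Facebook-in-the-middle” case can be sketched by reusing the same pure-Python X25519 exchange (RFC 7748 ladder; a teaching sketch, not production code). Here a hypothetical relay swaps each public key for one of its own while forwarding it, so it ends up holding one key with Alice and another with Bob. The `fingerprint` helper is an assumed stand-in for the kind of security-code comparison that lets users detect such a swap.

```python
import hashlib
import os

P = 2**255 - 19                       # prime field of Curve25519
A24 = 121665                          # ladder constant from RFC 7748
BASE_U = (9).to_bytes(32, "little")   # standard base point, u = 9


def x25519(k, u):
    """Curve25519 scalar multiplication (RFC 7748 Montgomery ladder); sketch only."""
    kb = bytearray(k)
    kb[0] &= 248                      # clamp the private scalar
    kb[31] &= 127
    kb[31] |= 64
    scalar = int.from_bytes(kb, "little")
    x1 = (int.from_bytes(u, "little") & ((1 << 255) - 1)) % P
    x2, z2, x3, z3 = 1, 0, x1, 1
    swap = 0
    for t in reversed(range(255)):
        bit = (scalar >> t) & 1
        swap ^= bit
        if swap:
            x2, x3, z2, z3 = x3, x2, z3, z2
        swap = bit
        a, b = (x2 + z2) % P, (x2 - z2) % P
        aa, bb = a * a % P, b * b % P
        e = (aa - bb) % P
        c, d = (x3 + z3) % P, (x3 - z3) % P
        da, cb = d * a % P, c * b % P
        x3 = (da + cb) ** 2 % P
        z3 = x1 * (da - cb) ** 2 % P
        x2 = aa * bb % P
        z2 = e * (aa + A24 * e) % P
    if swap:
        x2, x3, z2, z3 = x3, x2, z3, z2
    return (x2 * pow(z2, P - 2, P) % P).to_bytes(32, "little")


def keypair():
    private = os.urandom(32)
    return private, x25519(private, BASE_U)


def fingerprint(public):
    """Short hash of a public key, standing in for a security code."""
    return hashlib.sha256(public).hexdigest()[:12]


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# The relay substitutes its own public keys while forwarding them.
fb_to_alice_private, fb_to_alice_public = keypair()  # shown to Alice as "Bob's key"
fb_to_bob_private, fb_to_bob_public = keypair()      # shown to Bob as "Alice's key"

alice_key = x25519(alice_private, fb_to_alice_public)  # Alice believes this is shared with Bob
bob_key = x25519(bob_private, fb_to_bob_public)        # Bob believes this is shared with Alice

# The relay can compute both keys, so it can decrypt, read and re-encrypt every message.
relay_key_alice = x25519(fb_to_alice_private, alice_public)
relay_key_bob = x25519(fb_to_bob_private, bob_public)
print(alice_key == relay_key_alice, bob_key == relay_key_bob)  # True True

# Comparing fingerprints over another channel exposes the substitution.
print(fingerprint(fb_to_alice_public) == fingerprint(bob_public))  # False
```

The other option, keeping a copy of the tunnel key, would leave no trace on the wire at all: the client would simply pass `alice_key` to the server, so only trust in (or auditing of) the app itself could rule it out.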

Do citizens trust Facebook?

With the Cambridge Analytica scandal, trust in Facebook is at an all-time low. Recently, as part of a lecture, I asked students the level of trust they have in cloud service providers, on a rating from 1 (no trust) to 10 (full trust). We can see that Apple and Google were the most trusted, with Facebook and Twitter trailing well behind:

This backs up nearly every poll we have ever run on trust in these companies, where Facebook is always the least trusted company when it comes to gathering data.

Facebook and GDPR

In 2017, a court ruled that Facebook could not transfer any WhatsApp data from its German users. The case started in Germany after new T&Cs were pushed to users (August 2016), when the Hamburg-based data-protection commissioner Johannes Caspar demanded that Facebook stop transferring WhatsApp data from German users to Facebook. He also ordered that it should delete all the data it had already gathered from German citizens.

His argument was that users had not given genuine consent for this data to be harvested. After the ruling, Facebook cannot, at present, transfer any WhatsApp data for its more than 35 million German users.

As a result, Facebook paused the transfer of WhatsApp data to Facebook across Europe and is speaking with European regulators. But while Facebook has paused the data transfer, Caspar wanted the immediate deletion of all the data gathered from WhatsApp for German citizens.

Conclusions

So how will Facebook cope with running systems which are at two ends of the spectrum, respecting both the individual’s right to privacy and society’s right to protect itself? Mark Zuckerberg addresses this by saying that Facebook will respect the individual’s right to privacy by applying strong encryption, but that it also has responsibilities to society to protect against evil, and would have to work with law enforcement to prevent a range of threats. This, though, is a fine line to balance on, and the devil will be in the detail.