Remember The Days of EnCase … Now It’s All About Cloud, Buckets, Social Media and Slack?

Investigating the Capital One data breach

Remember when digital investigations were all about archiving disks and running EnCase? Well, those days are rapidly going, and there is a need for a new breed of investigators who understand Cloud services and Slack channel investigations. The latest breach of Capital One data gives an insight into the type of crime reporting that we may be seeing in the future. It pieces together traces from around the Internet and then links them back to a target.

Some banks have moved to the Cloud quicker than others. Capital One is one such bank, and has stated that it will not use any data centres by 2020, and will host ALL of its data in the Cloud. But this move has perhaps caused it problems, as the company is named in an indictment against an ex-Amazon.com employee, related to a data breach involving around 100 million people. This data is likely to include credit card details.

The core evidence appears to be that information related to the breach appeared on a GitHub account associated with Paige A. Thompson:

and that an IP address used to make the postings to GitHub is from a pool of addresses that her VPN server uses:

The core of the breach notification seems to revolve around a responsible disclosure email sent to Capital One on 17 July 2019, which identified that a specified GitHub account contained company records:

This seems to point to the leak coming from AWS S3 (Simple Storage Service) data buckets, which are a well-known source of data breaches. Amazon, though, has said that the breach wasn’t related to their services, but was due to an incorrectly configured firewall that left a loophole allowing access:

Paige had worked with Amazon in 2016, although it looks as if there was no insider knowledge used.

The maximum penalty involved is five years in prison. Within the indictment there is an impressive amount of information on the scope of the technical detail involved. It thus outlines the S3 bucket commands used on 21 April 2019:

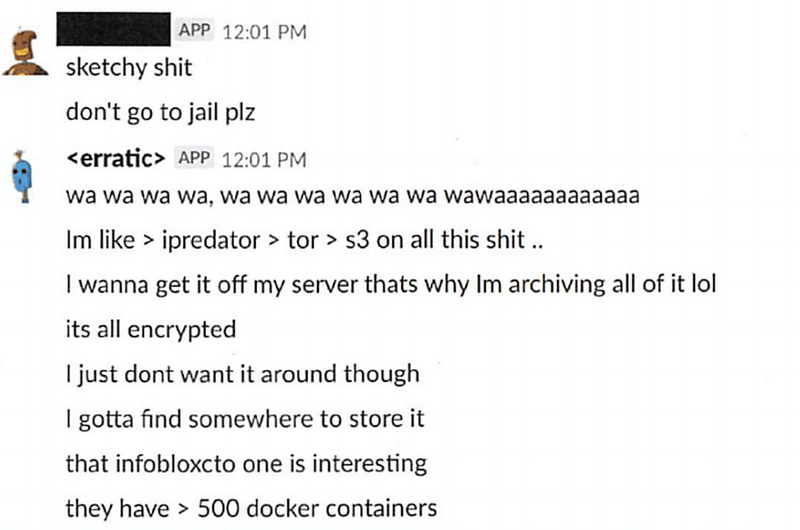

Within the evidence, there are details of the IP addresses used (42.246.x.x), which are associated both with the accesses to the Capital One site and with the associated GitHub account. The evidence also outlines the investigation of a Slack channel with which Paige (“erratic”) was associated, and where, on 26 June 2019, a person claimed to have the leaked files:

And, on 27 June, the “erratic” posted a panic message about getting off the site and finding a place to put the files:

Then the evidence trail turns to Twitter, where some direct messages from “erratic” outline the gathering of the data buckets:

And then in a physical search, the FBI found evidence related to Capital One and “A Cloud Computing Company”:

The indictment perhaps shows where we are with cybersecurity, and that the days of investigating hard drives are fading. Our criminal justice system thus needs to understand more about log files, Slack channels and social media if it is going to understand complex investigations. You must worry, though, that sometimes the concept of an IP address and a VPN server kinda goes over most people’s heads.

What’s the defence?

The core weakness of AWS S3 buckets is well known, and has resulted in many data breaches. These buckets are easy to share, and provide a simple link to share files, but the protection on accesses is not well defined, or integrated into the general security/logging infrastructure of an organisation.

The core of protection against this type of attack is to log all accesses to sensitive areas, and to alert when there is suspicion. Overall it looks like Capital One had logged the details of the accesses, but did not react to them at the time of the breach, and was only alerted through a responsible disclosure. Companies are thus increasingly investing in a 24x7 SOC (Security Operations Centre), where logs can be processed in real time and any suspicious activity is fed to human analysts. Anything related to S3 commands should have been flagged immediately to human analysts, especially where large files were involved.
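
The alerting idea can be sketched in a few lines of Python. This is only a minimal illustration: the record layout, the operation names, the `customer-data/` prefix and the 100 MB threshold are all assumptions made for the example, and are not a real AWS log format or Capital One's configuration.

```python
from dataclasses import dataclass


# Hypothetical record layout; a real deployment would parse its own log format.
@dataclass(frozen=True)
class AccessRecord:
    source_ip: str
    operation: str   # illustrative names such as "get", "list" or "sync"
    resource: str
    bytes_sent: int


# Assumed policy values, chosen only for this example.
SENSITIVE_PREFIXES = ("customer-data/",)
BULK_OPERATIONS = {"list", "sync"}
LARGE_TRANSFER_BYTES = 100 * 1024 * 1024


def alert_reasons(record):
    """Return the reasons (possibly none) why a record deserves attention."""
    reasons = []
    if record.resource.startswith(SENSITIVE_PREFIXES):
        reasons.append("sensitive resource")
    if record.operation in BULK_OPERATIONS:
        reasons.append("bulk operation")
    if record.bytes_sent >= LARGE_TRANSFER_BYTES:
        reasons.append("large transfer")
    return reasons


def triage(records):
    """Yield (record, reasons) pairs for the records an analyst should see."""
    for record in records:
        reasons = alert_reasons(record)
        if reasons:
            yield record, reasons
```

In practice the output of something like `triage` would feed the SOC's alert queue, so that a bulk copy of a sensitive bucket reaches a human analyst at the time it happens rather than months later.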

With defence-in-depth we have an approach with multiple layers of defence, from firewalls, to white-listed access lists for sensitive areas, the zoning of the infrastructure with different security levels, and ultimately to the encryption of the data itself.

An investment in defence-in-depth is always a good strategy, especially with strong usage of firewalls and IDSs (Intrusion Detection Systems). An approach which uses the lowest privilege is key in many systems, where a user must escalate their privileges to gain access to sensitive information. For the most sensitive accesses, there may also be a human authorization involved. Tripwire systems can also be used, where accesses to sensitive file types are monitored, and alerts are generated whenever these occur.
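
The lowest-privilege approach, with human approval for the most sensitive level, might look something like the following Python sketch. The three level names, the `Session` class and the rule that an approver must be a different person are assumptions for illustration only.

```python
from enum import IntEnum
from typing import Optional


class Level(IntEnum):
    # Hypothetical privilege levels, lowest first.
    BASIC = 1
    ELEVATED = 2
    SENSITIVE = 3


class Session:
    def __init__(self, user: str):
        self.user = user
        self.level = Level.BASIC   # every session starts at the lowest level
        self.audit = []            # log of both granted and denied events

    def escalate(self, target: Level, approved_by: Optional[str] = None) -> bool:
        """Try to raise the session to `target`; return True on success."""
        if target <= self.level:
            return True
        # The top level needs sign-off from a second person.
        needs_approval = target == Level.SENSITIVE
        if needs_approval and (approved_by is None or approved_by == self.user):
            self.audit.append((self.user, f"DENIED escalation to {target.name}"))
            return False
        self.level = target
        self.audit.append((self.user, f"GRANTED escalation to {target.name}"))
        return True

    def can_access(self, required: Level) -> bool:
        """Check an access against the current level, logging the outcome."""
        allowed = self.level >= required
        outcome = "GRANTED" if allowed else "DENIED"
        self.audit.append((self.user, f"{outcome} access at {required.name}"))
        return allowed
```

The design choice here is that denials are logged as well as grants, so a run of failed escalations is itself something that can be alerted on.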

So:

- Don’t use S3 buckets for sensitive data.

- Replace role-based security with attribute-based access control, using rules which include User (ID, Role or Group), Resource (Data and/or Service), Action (CRUD: Create, Read, Update and Delete) and Context (Location, Time and/or IP address).

- Encrypt the data at its source.

- Test your firewalls, and have multiple layers of defence.

- White-list accesses for IP address/geo-location/etc.

- Lock-down on IP addresses which violate access policies.

- Integrate identity access management into every part of the organisation, especially in supporting multi-factor and out-of-band authentication.

- Log both successful and unsuccessful accesses to systems and files.

- Invest heavily in firewalls and IDS.

- Have multiple privilege levels, and start users on the minimal level required for their role and function. Higher access levels might require some human approval.

- Run a 24x7 SOC.

- Log everything and classify for threat level.

- Alert on accesses to sensitive areas.

- Zone your network with security levels, and firewall each zone.

- Support the lowest privilege for all accesses.
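
Several of these points (attribute-based access control, white-listing of IP addresses, and a default of lowest privilege) can be combined in one policy check. The Python sketch below is a minimal illustration of a rule built from User, Resource, Action and Context; the class names, the `fraud-analyst` role, the `customer-data/` prefix, the network range and the working hours are all hypothetical.

```python
from dataclasses import dataclass
from datetime import time
from ipaddress import ip_address, ip_network


@dataclass(frozen=True)
class Request:
    user_role: str
    resource: str
    action: str      # "create", "read", "update" or "delete"
    source_ip: str
    at: time


@dataclass(frozen=True)
class Rule:
    roles: frozenset        # User attribute
    resource_prefix: str    # Resource attribute
    actions: frozenset      # Action attribute (CRUD)
    networks: tuple         # Context: white-listed source networks
    start: time             # Context: allowed time window
    end: time

    def permits(self, req: Request) -> bool:
        ip = ip_address(req.source_ip)
        return (
            req.user_role in self.roles
            and req.resource.startswith(self.resource_prefix)
            and req.action in self.actions
            and any(ip in net for net in self.networks)
            and self.start <= req.at <= self.end
        )


def is_allowed(req, rules):
    # Default deny: access is granted only if some rule matches every attribute.
    return any(rule.permits(req) for rule in rules)


# Example policy with assumed values.
RULES = [
    Rule(
        roles=frozenset({"fraud-analyst"}),
        resource_prefix="customer-data/",
        actions=frozenset({"read"}),
        networks=(ip_network("10.20.0.0/16"),),
        start=time(8, 0),
        end=time(18, 0),
    )
]
```

With this policy, a read of `customer-data/` by a fraud analyst from inside `10.20.0.0/16` during working hours is allowed, while the same request from an outside address, at night, or with a `delete` action is refused.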

A rocky road ahead?

With companies facing an increasing number of fines for data breaches, along with compensation claims and other associated losses, it is likely to be a difficult period for the company. In the first few hours after the announcement, Capital One’s share price fell by nearly 1.2%:

In scope it is similar to the Equifax breach in 2017. This related to an unpatched vulnerability in their Web infrastructure that resulted in a breach of data for 147 million of its consumers. Equifax’s CEO at the time, Richard Smith, initially blamed his IT staff for failing to patch their systems, but the report into the breach reveals that their infrastructure was riddled with security problems and a general lack of investment in security.

Along with this, Equifax’s infrastructure suffered from many problems, including continual crashes, and incorrect results showing up in searches. The main failing in the breach was the failure to patch their Apache Struts infrastructure, even though a major vulnerability had been announced many months previously. In fact, their whole data architecture was creaking and used a near 50-year-old web infrastructure. The vulnerability allowed intruders to access Equifax’s data through a shell command, and they remained unnoticed for over two months. From the initial pivot point, they then had 256 accesses to the system and made over 9,000 data queries. As a result they managed to gain access to an unencrypted file containing passwords and to over 40 databases of unencrypted customer data.

Equifax was not able to detect the accesses as their network scanner had been inactive for around 19 months due to an expired certificate. It then took another two months for Equifax to update the expired certificate, after which the accesses were detected. The company took another two months to actually report the breach (before which some staff sold off their shares in the company). And so Equifax disclosed the hack in September 2017, having failed to patch a vulnerability in Apache Struts 2 (CVE-2017-5638) which was published in March 2017.

For Equifax the cost has been at least $1.6 billion. The company has had to invest over $1 billion in improving its security, and will have to pay $425 million to a Consumer Restitution Fund, along with a $175 million payment to several US states.

In 2016, Uber leaked the data of around 57 million users and of 3.7 million drivers. This included the names, email addresses, and mobile phone numbers of users. In the end, Uber took over a year to actually report the breach, and initially paid the hackers $100,000 as a bug bounty reward.

It occurred when hackers were able to gain access to Uber’s GitHub account, where they found the login details for Uber’s AWS account. With these account details, the hackers then managed to download 16 large files which included passengers’ names, phone numbers, email addresses, and location details.

Uber put the blame on their chief security officer, Joe Sullivan, who was replaced as part of the mitigation plan. As with Yahoo, the company announced the breach at a time when part of their company was being acquired. And so over the course of the months after the breach was announced, the valuation of Uber dropped from $69 billion to $48 billion. Uber lost a great deal of their reputation around the hack. In the UK, the breach affected around three million UK-based users, and resulted in a £385,000 fine from the ICO, while in the US they were fined $148m for not notifying drivers about the breach.

In 2014, hackers managed to gain access to 83 million accounts within JP Morgan Chase, which resulted in the company making significant investments in their IT infrastructure, and, as with Uber, they ended up replacing their CSO. Within the hack, the hackers managed to get root access to more than 90 servers within the infrastructure, and managed to transfer funds and close accounts. It is thought that the hack brought profits of over $100 million.

A number of customers were in the end impacted through financial fraud. The cost to JP Morgan Chase is not known, but the company has invested intensively in their security practices, and the breach is likely to have cost several billion dollars. A recent estimate of JP Morgan Chase’s spending on security is $250 million per year.