I Started with KB … then MB … then GB … Now It’s PetaByte … Soon Exabyte … And Onto ZetaBytes ……

I Started with KB … then MB … then GB … Now It’s PetaByte … Soon Exabyte … And Onto ZetaBytes … And then YottaBytes

This year, we will create around 418 zettabytes of data — that’s 418 billion 1TB hard disks. And we are probably just at the start of the growth of data … in fact, we will probably have to talk about Yottabtyes in the near future.

We are becoming swamped with the ever-increasing demand for data storage. Soon we will struggle to find enough hard disks to support the growth in data. But there’s a solution, with DNA storage we could map 215 Petabytes (215 million GBs) onto a single gram of DNA. The Escherichia coli bacterium, for example, has a storage density of about 10¹⁹ bits per cubic centimetre. We could then store every bit of data for the world within a single room. Unlike data stored in an electronic form, such as in an SSD or HDD, which degrade over time, DNA stores data that will last for over one million years.

For me, I need to get used to Petabytes and Exabytes, and see GBs and TBs as in the same way I have done with KBs and MBs in the past. My PhD, for example, was based on a computer with 16MB of memory — with a 500MB hard disk — and it was a great step forward at the time. Its floppy disk could hold a massive 720KB. My first office PC had only 640KB on memory, and my first home computer — a ZX81 — has 1KB. You could even upgrade it with a “16KB” expansion pack:

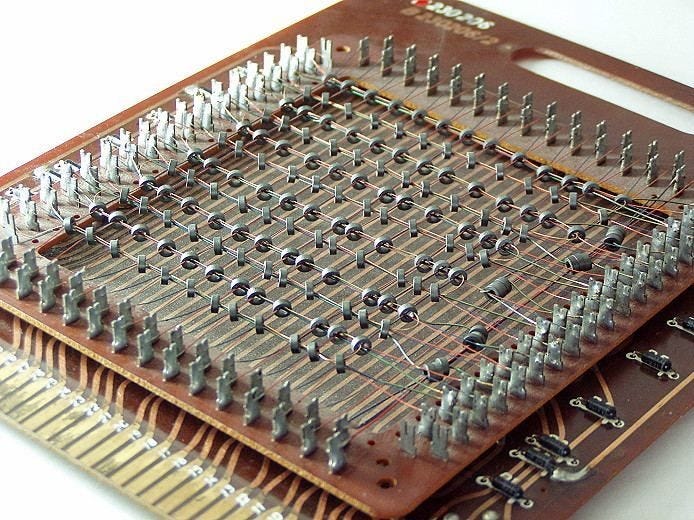

A few years ago I visited the National Air and Space Museum in Washington and the highlights of the trip were to see: the “1KB” of memory that was used to fly to the Moon; seeing the Cray 1 supercomputer (which you could sit on and had a massive amount of memory — 1MB); and to see a magnetic core memory storage device:

This wonderful storage device did not store a great deal of information, but the data stored can be preserved for centuries. Like it or not, the memory that we currently use will decade over the years, and is unlikely to be read within decades or centuries.

With the move from magnetic disk systems to static memory has improved access times, but the physical space we require for the storage is still fairly large, and we are struggling to keep up with the demand for data storage. At present my One Drive can store 10TB, so just imagine that every person on the planet used up 10TB? In Scotland we would need:

5,000,000,000,000,000,000 Bytes

I have taught KB, MB, GB, and TB. But I struggle above that. Well 1o¹² is 1 TB, and 10¹⁵ is 1 PetaByte, and 10¹⁸ is 1 Extrabyte. So Scotland would need 5,000,000 TetaBytes, or 5,000 PetaBytes, or 5 ExtraBytes. Imagine if we scaled across the World and the amount of data that we would need.

Every aspect of our lives is increasing its demand for data, for example, Intel predicts that there will be 152 million connected cars by 2020, generating over 11 Petabytes of data every year — that’s over 40,000 250GB hard disks full of information. With the intelligent car, we also see a statement of:

The Intelligent Car … (Almost) as Smart as You

where we will see cars analysing and sharing a whole range of data in order to make decisions on the optimal performance of the driver and the vehicle. The statement of “.. as Smart as You” seems to hint that you’re not quite as good a driver as you could be!

So how can we store large amounts of data, and make it robust against large-scale devastation?

Big Big Big Data

We are moving into an era of Big Data, and there’s an increasing demand for it. But how can we scale and create massive amounts of data which are both dense in their storage, but also be robust against large-scale data storage failure (such as on a devastating electrical power outage)? It uses oligonucleotides which are short nucleic acid polymers.

DNA storage stores digital data in the form of a DNA base sequence. It leads to much denser storage of data. Some say that it could be used to store data which could be recovered in a disaster situation, and where future generations will be able to read the archived DNA sequences. It is, though, slow, as there is DNA sequencing involved for large amounts of data.

It was proposed by Mikhail Neiman in 1964, who stored the data on a device named MNeimON (Mikhail Neiman OligoNucleotides). Since then George Church at Harvard University, in 2012, estimated they could store 5.5 petabits (700TB) on a cubic millimetre of DNA.

In 2013, the EBI (European Bioinformatics Institute) stored five million bits, including text and audio files, on a speck of dust. It included all of Shakespeare’s sonnets and the audio from Martin Luther King’s famous speech (“I Have a Dream”). It advanced research by encoding a strong error correction method using overlapping short oligonucleotides, and where the sequences were repeated four times (with two of them written backwards).

In order to improve the robustness of the storage, researchers have recently added the Reed-Solomon error correction code, which is used on CD-ROMs to correct errors. With this, the researchers predicted the DNA could be encapsulated in silica glass spheres and could be preserved for one million years for -18°C. The current cost for the storage was quoted at $500/MB.

So what?

Well, just for you, I’ve coded a DNA encoder and decoder:

With the nucleic acid sequence there is a sequence of letters that indicate the order of nucleotides within a DNA…asecuritysite.com

With the nucleic acid sequence, there is a sequence of letters that indicate the order of nucleotides within the DNA. These are ‘G’, ‘A’, ‘C’, and ‘T’. The storage of data is thus stored as strands of synthesized DNA that stored 96 bits, each using the bases (TGAC) defining a binary value (T and G = 1, A and C = 0). When reading the data, it is sequenced — as we would with the human genome — and then convert the TGAC bases into binary. Each strand has a 19-bit address on the start of the block.

For example, the DNA sequence, from the program above, for “Big Data” gives:

TGTCGCGCTACAGTGTGCTGCAGTACTCTGACATCGAGATACG

TACGTACGTACGTACGTACGTACGTACGAGAG

Conclusion

The demand for data increases, where we are moving into storing every single element of our lives, and where few things are deleted. DNA storage offers one method for storing massive amounts of data on a speck of dust, and where it could be preserved for millions of years. It would be interesting to dig out all those floppy disks from the past and see if they have managed to hold onto the data that they once held.

If you are interested in the method, you can view here: