Peer Review … Flawed, But What’s Better?

Last week, the UK's REF (Research Excellence Framework) 2021 results were published, with the key metrics for academic research mainly built around work published in research journals and conferences. For some in academia, Daniel J Bernstein (djb) perhaps encapsulates the importance of publishing research work:

Along with this, published work often provides the foundation of PhD work and of research careers for ECRs (Early Career Researchers). Also, when academics apply for posts, h-index and i10-index metrics are often used as a basic measure of the "quality" and "quantity" of their research. When comparing academics at different institutions, a basic measure of quality can come down to the number and scale of the citations of their published work. The venues where someone publishes are also assessed, with higher-quality venues often worth much more than weaker ones. Unfortunately, some venues will publish anything that is submitted, as long as the author pays the registration fee.
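Both metrics are simple to compute from a list of per-paper citation counts: the h-index is the largest h such that h papers each have at least h citations, and the i10-index (as used by Google Scholar) is the number of papers with at least 10 citations. A minimal Python sketch, assuming the citation counts are already available as a list of integers (the function names and sample counts are hypothetical, for illustration only):

```python
from typing import Iterable


def h_index(citations: Iterable[int]) -> int:
    """Largest h such that h papers each have at least h citations."""
    ranked = sorted(citations, reverse=True)  # most-cited paper first
    h = 0
    for position, count in enumerate(ranked, start=1):
        if count >= position:
            # The top `position` papers all have at least `position` citations
            h = position
        else:
            break
    return h


def i10_index(citations: Iterable[int]) -> int:
    """Number of papers with at least 10 citations."""
    return sum(1 for count in citations if count >= 10)


if __name__ == "__main__":
    # Hypothetical citation counts for one researcher's papers
    papers = [48, 33, 12, 10, 9, 4, 1, 0]
    print(h_index(papers))    # 5
    print(i10_index(papers))  # 4
```

Both functions reduce a whole publication record to a single integer and say nothing about the quality of the venues involved, which is why they can only ever be a basic measure.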

Few people outside academia have such crude (and public) measures of their career progress. Imagine if a software developer had a public page showing the number of times their code was used by others outside their company.

The peer review process

But the peer review process often stands in the way of research aspirations, as it is there to make sure that only good-quality work gets through. It is also required to create and sustain trusted sources of dissemination. A good conference should publish work that is novel, rigorous, and interesting. A poor conference will publish work that cannot be trusted on these key attributes.

But the peer-review process for research papers is (or can be) flawed, and many people involved in the process know it. For a reviewer, it is mainly an unpaid task that can take many hours of intensive reading and understanding, and it often falls on those who already have high workloads. It can also be delegated to a less experienced researcher, such as when a professor passes a review on to their PhD student.

But what are the alternatives?

Research paper metrics

For research work to be published, a paper will go through various stages of review before it can be accepted for publication. Now, a new paper sheds light on the key characteristics that will get a paper published, or not:

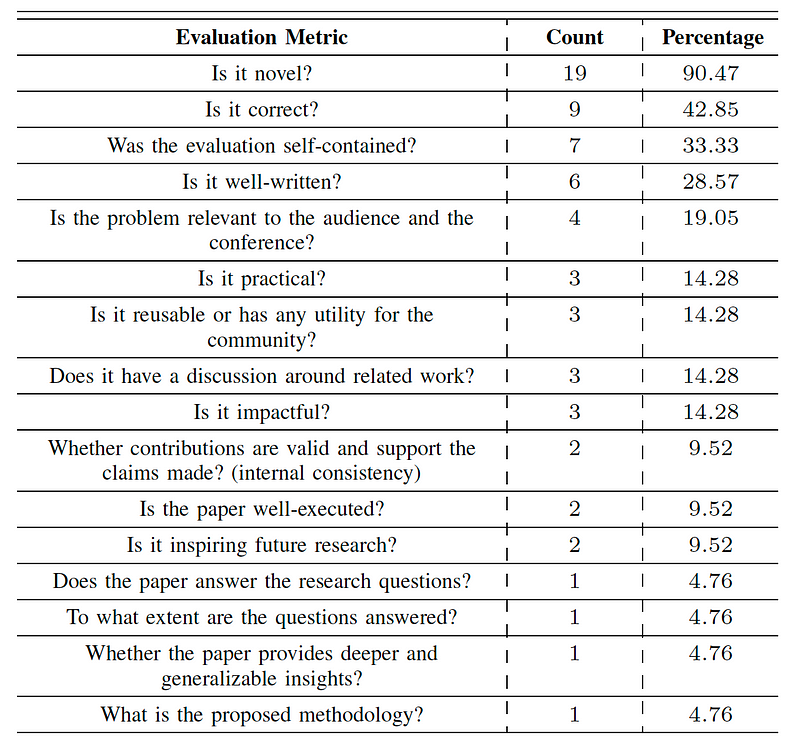

In the paper, a number of reviewers were interviewed. When asked about the criteria they used, they identified novelty, correctness, a self-contained evaluation, and good writing as the key elements for success:

We can see that novelty is way out in front as the main reason for success. Within security, the definition of novelty is fairly vague, and one quote highlights one approach to assessing it:

“What’s the related work, who solves similar problems in the same or possibly different domains, have the authors talked about those other papers sufficiently? Is the nuance and difference between them of sufficient delta?”

Another stresses the need for well-written and well-structured papers:

“Something that is well-written, well-structured, has good flow, can prepare the reader for what is coming next, can help the reader ask the right questions or provide the answers. This is always very welcome”

and another notes that, at conferences with low acceptance rates, reviewers may look for reasons to reject:

“We know that the acceptance rate is so low (at these conferences) that sometimes there can be a tendency from the reviewer side to look for reasons to reject instead of reasons for accepting a paper.”

In terms of things that researchers should avoid, the paper lists common criticisms of the work, including reinventing a known problem and making only a trivial advancement. A key pointer, though, is that the paper examines the weaknesses of each section, such as an introduction that does not highlight the contributions or conceptual ideas:

For the claims made in a paper, the research team found that over-claiming or making incorrect claims was one weakness, along with not backing up claims:

Writing quality is also fundamental in the eyes of reviewers. Weaknesses here include bad grammar, poor figures, and unclear writing.

For conference Programme Committees (PCs), the paper outlines these key objectives:

- Accept papers of quality.

- Provide constructive feedback.

- Evaluate correctness, novelty, and validity.

- Review and advocate papers fairly.

- Help shape the best program.

When it comes to feedback on papers, the paper outlines:

- Providing constructive and actionable feedback.

- Being detailed and informative.

- Being comprehensive and well-structured.

- Being clear and carefully written.

- Being objective.

- Including a paper summary.

- Being anonymous.

Some conferences now offer rolling submissions, which allow for a dialogue between reviewers and authors. While this is positive for authors, it is less well received by reviewers, as it may generally increase their workload.

Well … if you are into research … go read the paper here.