To Scale To The Future: Goodbye SQL?

To Scale To The Future: Goodbye SQL?

Say hello to NoSQL

SQL (Structured Query Language) is one of the oldest programming languages and has done us well. It was created in 1974 and is more than 49 years old! But, it is rather fixed in its ways and does not cope well with newer data object formats, such as JSON.

And, so we see the rise in the NoSQL databases, and where we can create JSON objects where we can add new attributes to our records. One of the most popular is DynamoDB, and hosted in Amazon AWS. It provides simple API integration with a range of programming languages, including .NET, Python, and Golang, while also supporting command-line scripting. And, best of all, it is managed by Amazon, so no need for special servers and software.

Relational v Non-Relational databases

With a relational database, we have a fixed schema, and where records are stored in rows and columns. We then use SQL to query the records. In a non-relational mode, we can have a dynamic schema and use key-value pairs:

The great advantage is that we can easily scale our non-relational databases with new schemas. There is thus less checking going on, so we need to watch that we are using the correct names for key values. In DynamoDB, we can have an almost unlimited amount of data storage, and where our attributes for data objects can differ.

For a more traditional database, AWS offers the Amazon Relational Database Service (Amazon RDS), Amazon Redshift, and Amazon Aurora. RDS is used for relational databases, and where there are complex transactions or complex queries. They have a medium to high query or write rate, and supported high durability. The database types supported are Amazon Aurora, PostgreSQL, MySQL, MariaDB, Oracle, and Microsoft SQL Server.

Amazon Aurora is an enterprise-class relational database and is compatible with MySQL and PostgreSQL. Overall, it automates key tasks such as provisioning, backup, recovery, and failure detection. Amazon Redshift has applications into Big data applications, including those related to enterprise data warehouses (EDWs). It has a core focus on the data, and less on database management.

DynamoDB

DynamoDB is particularly good at scale, and can support TBs of data, which has a predictable amount of latency. It also fits in well with AWS Lambda for serverless computing. With this, we can trigger the scripts, such as by receiving an email message. DynamoDB also likes data sets that are fairly simple in their structure and uses a key-value structure. This makes it easy to create queries.

Overall, the DynamoDB we have two keys:

- Partition key (single key). As a database grows, the partition key is used to segment it into logical blocks. For example, we might partition based on a user name, and then have data objects associated with each user.

- Partition and sort key (compound key). The addition of the sort key allows us to structure the data objects with the addition of a sort key. For example, a user name may have one or more matriculation numbers. We can then use the matriculation number as a sort key to quickly find data related to the compound key.

Programming with DynamoDB

So let’s do a simple worked example of using DynamoDB.

Creating a DynamoDB database in Python

We can first create our database named “students” with:

import boto3

dynamodb = boto3.resource('dynamodb')

table = dynamodb.create_table(

TableName='students',

KeySchema=[

{

'AttributeName': 'username',

'KeyType': 'HASH'

},

{

'AttributeName': 'matric',

'KeyType': 'RANGE'

}

],

AttributeDefinitions=[

{

'AttributeName': 'username',

'AttributeType': 'S'

},

{

'AttributeName': 'matric',

'AttributeType': 'S'

},

],

ProvisionedThroughput={

'ReadCapacityUnits': 5,

'WriteCapacityUnits': 5

}

)To create and run the script from the command line:

$ nano db01.py

$ python db01.pyThis has a partition key of “username” and a search key of “matric”. We can then go to the AWS Management Console and view our newly created database:

Adding records

Next, we can add a few records to the database:

import boto3

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

table.put_item(

Item={

'username': 'fsmith',

'matric': '01112345',

'first_name': 'Frank',

'last_name': 'Smith',

'age': 25,

'account_type': 'MSc in Security',

}

)

table.put_item(

Item={

'username': 'bsmith',

'matric': '01112346',

'first_name': 'Bert',

'last_name': 'Smyth',

'age': 31,

'account_type': 'MSc in Databases',

}

)

print(table.creation_date_time)

print(table)This will add two records into the database, and using the keys of username and matric:

$ nano db02.py

$ python db02.py

2023-01-15 00:26:34.361000-08:00

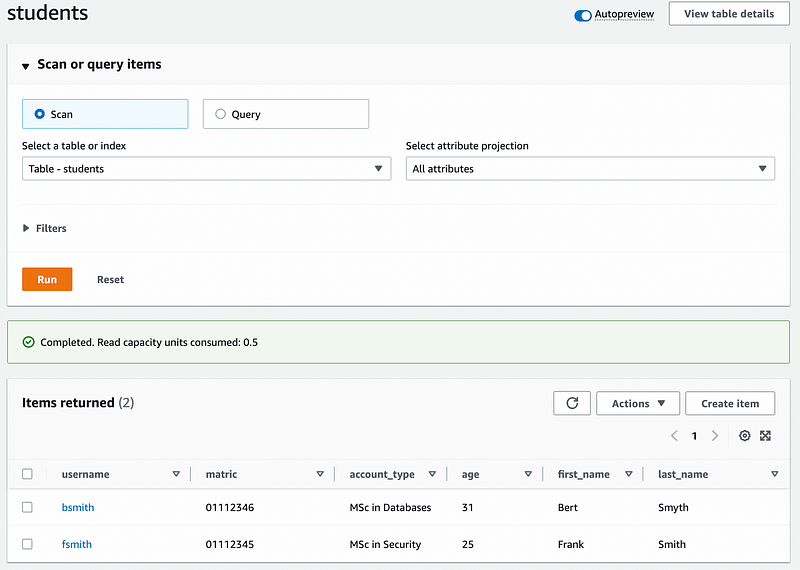

dynamodb.Table(name='students')If we now go back to your AWS Management Console, we can then list the items in the database (by selecting the table, and then “List items”:

By clicking on the record, we can then view the form view. Notice that new fields have been automatically added and that first_name is defined as a string (“S”), and age as a number value (“N”):

We can also view this in a JSON format:

The JSON for one of the records is then:

{

"username": {

"S": "bsmith"

},

"matric": {

"S": "01112346"

},

"account_type": {

"S": "MSc in Databases"

},

"age": {

"N": "31"

},

"first_name": {

"S": "Bert"

},

"last_name": {

"S": "Smyth"

}

}Performing a search with a key

We can now perform a search on the database with:

import boto3

from boto3.dynamodb.conditions import Key, Attr

# Get the service resource.

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

response = table.query(

KeyConditionExpression=Key('username').eq('fsmith')

)

items = response['Items']

print(items)In this case, we are searching for a username of “fsmith”:

$ python db03.py

[{'last_name': 'Smith', 'first_name': 'Frank', 'username': 'fsmith',

'account_type': 'MSc in Security', 'matric': '01112345', 'age': Decimal('25')}]Next, we can perform a search on the AWS Management Console by selecting “Explore table items”:

We can then perform the same search as previously from Python on the AWS Management Console:

Searching for attributes in Python

Our keys are unique search values, but we can also search for the attributes of the records:

import boto3

from boto3.dynamodb.conditions import Attr

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

response = table.scan(

FilterExpression=Attr('age').lt(27)

)

items = response['Items']

print(items)In this case, we search for an age less than 27. A sample run gives:

$ python db05.py

[{'last_name': 'Smith', 'first_name': 'Frank', 'username': 'fsmith',

'account_type': 'MSc in Security', 'matric': '01112345', 'age': Decimal('25')}]We can modify the search with logical operations on the attributes. In the following we search where the last name begins with an “S” and where the account type starts with “MSc”:

import boto3

from boto3.dynamodb.conditions import Attr

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

response = table.scan(

FilterExpression=Attr('last_name').begins_with('S') & Attr('account_type').begins_with('MSc')

)

items = response['Items']

print(items)The matches we can then include chained conditions with & (and), | (or), and ~ (not). and for attributes that match lt (less than), lte (less than or equal to) and ge (greater than):

A sample run gives:

$ python db08.py

[{'last_name': 'Smyth', 'first_name': 'Bert', 'username': 'bsmith',

'account_type': 'MSc in Databases', 'matric': '01112346', 'age': Decimal('31')}, {'last_name': 'Smith', 'first_name': 'Frank', 'username': 'fsmith', 'account_type': 'MSc in Security', 'matric': '01112345', 'age': Decimal('26')}]Generally, we using boto3.dynamodb.conditions.Key when the condition relates to a key of the item and boto3.dynamodb.conditions.Attr for the attribute of the item.

Getting an item in Python

With our key values, we can locate a data record using the get_item() method. In the following, we get the record for “bsmith”:

import boto3

from boto3.dynamodb.conditions import Key, Attr

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

response = table.get_item(

Key={

'username': 'bsmith',

'matric': '01112346'

}

)

item = response['Item']

print(item)When we run it, we get:

$ nano db07.py

$ python db07.py

{'last_name': 'Smyth', 'first_name': 'Bert', 'username': 'bsmith', 'account_type': 'MSc in Databases', 'matric': '01112346', 'age': Decimal('31')}Updating an item in Python

Often we have to update records. With Python, we can use the update_item() methods with the attribute that we want to change. In this case, we will update the record related to “fsmith” for the age of 26:

import boto3

from boto3.dynamodb.conditions import Key, Attr

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

table.update_item(

Key={

'username': 'fsmith',

'matric': '01112345'

},

UpdateExpression='SET age = :val1',

ExpressionAttributeValues={

':val1': 26

}

)

response = table.get_item(

Key={

'username': 'fsmith',

'matric': '01112345'

}

)

item = response['Item']

print(item)If we run from the command line interface, we can see that the record has been updated:

$ python db06.py

{'last_name': 'Smith', 'first_name': 'Frank', 'username': 'fsmith',

'account_type': 'MSc in Security', 'matric': '01112345', 'age': Decimal('26')}We can then check this by going back to the AWS Management Console, where we see the updated age value:

Listing databases

We can use Python to determine the names of the databases we have in our account:

import boto3

dynamodb = boto3.resource('dynamodb')

tables = list(dynamodb.tables.all())

print(tables)If we run from the command line, we get:

$ python db04.py

[dynamodb.Table(name='students')]Creating a database from CLI

Along with configuring through APIs, we can configure using the AWS command line:

$ aws dynamodb create-table \

--table-name students2 \

--attribute-definitions \

AttributeName=username,AttributeType=S \

AttributeName=matric,AttributeType=S \

--key-schema \

AttributeName=username,KeyType=HASH \

AttributeName=matric,KeyType=RANGE \

--provisioned-throughput \

ReadCapacityUnits=10,WriteCapacityUnits=5This creates the same database schema as before and creates as “students2”. We can now either run our Pythong script to view the database or go to the AWS Management console:

We can then list our database in the Command Line Interface (CLI):

$ aws dynamodb list-tables

{

"TableNames": [

"students",

"students2"

]

}To get items, we can use the command line of:

$ aws dynamodb get-item --consistent-read --table-name students

--key '{ "username": {"S": "fsmith"}, "matric": {"S": "01112345"}}'

{

"Item": {

"last_name": {

"S": "Smith"

},

"first_name": {

"S": "Frank"

},

"username": {

"S": "fsmith"

},

"account_type": {

"S": "MSc in Security"

},

"matric": {

"S": "01112345"

},

"age": {

"N": "26"

}

}

}To add items to the database we can use the — put-item option and specify the JSON string for the record. For example to add a new user:

aws dynamodb put-item --table-name students

--item '{"username": {"S": "csmith"}, "matric": {"S": "01112347"},

"first_name": {"S": "Colin"},

"last_name": {"S": "Smiths"},"age": {"N":"42"},

"account_type": {"S":"MSc in Databases"}}'If we now list our database in the AWS Management Console we see the new record:

We can use the update-item option to change attributes. In the following, we change the user’s last name to “Smithes”, and then show the record that has been updated:

$ aws dynamodb update-item --table-name students

--key '{"username": {"S": "csmith"}, "matric": {"S": "01112347"}}'

--update-expression "SET last_name = :newval"

--expression-attribute-values '{":newval":{"S":"Smithes"}}'

--return-values ALL_NEW

{

"Attributes": {

"last_name": {

"S": "Smithes"

},

"first_name": {

"S": "Colin"

},

"username": {

"S": "csmith"

},

"account_type": {

"S": "MSc in Databases"

},

"matric": {

"S": "01112347"

},

"age": {

"N": "42"

}

}

}If we now list our database in the AWS Management Console we see the updated record:

Deleting the database

Finally, we can delete our database with the delete() method:

import boto3

dynamodb = boto3.resource('dynamodb')

table = dynamodb.Table('students')

table.delete()In the CLI, we can use:

$ aws dynamodb delete-table --table-name studentsThere is no question that SQL is on the way out. It is fixed and doesn’t cope well with new fields. So, say hello to NoSQL.