AI Detectors: The Ethical Dilemma of Bias Towards Native English Speakers


And so academia faces one of its greatest challenges ever!

The rise of generative language models, such as ChatGPT, seems to know no bounds. For plagiarism, we built tools that match words against existing content, but an AI bot already reorders and rewords its output, so there is little for such tools to match. In fairness, humans have long done the same: they copy and paste, and then rephrase. AI bots are thus doing what humans have always done when writing essays, except that they are very good at setting out an answer in a logical way.

Bias against non-native English writers

In a new paper [1], the research team investigated writing samples from native and non-native English writers and found that AI detectors often classified the work of non-native English writers as AI-generated.

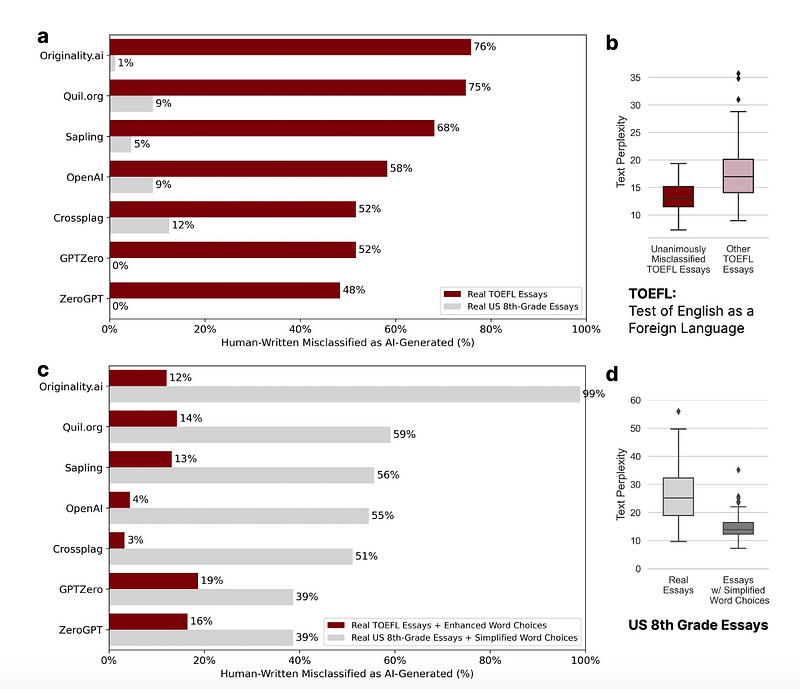

By contrast, the work of native English writers was usually correctly identified as human-written. Figure 1 shows the results for detectors including Originality.ai, Quill.org, Sapling and OpenAI when applied to human-written work. Panel (a) shows that more than half of the TOEFL (Test of English as a Foreign Language) essays written by non-native speakers were incorrectly classified as “AI-generated”, whereas the rate was much lower for US 8th-grade essays. Originality.ai, for example, has a 76% misclassification rate for non-native English speakers and only a 1% rate for native English speakers.
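The misclassification rate quoted above is simply the fraction of human-written essays that a detector flags as AI-generated (a false positive rate), calculated separately for each group of writers. The following Python sketch illustrates that arithmetic. The records are hypothetical counts chosen to mirror the 76% and 1% figures, not the paper's data, and `false_positive_rates` is an illustrative helper, not part of any detector's API.

```python
from collections import defaultdict

# Hypothetical records of (writer_group, flagged_as_ai). All essays are
# assumed to be human-written, so every "flagged" essay is a false positive.
# Counts are illustrative only and are not taken from the paper.
records = (
    [("non-native", True)] * 76 + [("non-native", False)] * 24
    + [("native", True)] * 1 + [("native", False)] * 99
)


def false_positive_rates(records):
    """Return {group: fraction of human-written essays flagged as AI}."""
    flagged = defaultdict(int)
    total = defaultdict(int)
    for group, is_flagged in records:
        total[group] += 1
        flagged[group] += int(is_flagged)
    return {group: flagged[group] / total[group] for group in total}


rates = false_positive_rates(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} misclassified as AI-generated")
```

Running it prints a 76% rate for the non-native group and 1% for the native group. Computing the rate per group, rather than over all essays pooled together, is what exposes the bias: pooled, these records would give a single 38.5% figure that hides the gap.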

Overcoming bias

In Figure 1 (c), ChatGPT is used to rewrite the non-native essays with the prompt “Enhance the word choices to sound more like that of a native speaker”, and this reduces the rate at which they are misclassified as AI-generated (the red bar). Conversely, asking ChatGPT to simplify the word choices of native-speaker essays, as if written by a non-native speaker, significantly increases misclassification (the grey bar).

The research team then looked at US college admission essays and scientific abstracts generated by ChatGPT (Figure 2). Here, the red bar shows that detection rates were high when no second ChatGPT prompt was applied, but these were significantly reduced when the “Elevate the provided text by employing literary language” prompt was used. In (c), we see that the prompt “Elevate the provided text by employing advanced technical language” also leads to a reduction in detection rates for the abstracts.

Conclusions

This is a time of change. Using AI tools to detect AI-generated text is not yet a well-defined science. For academics to say “You are only cheating yourself” is no answer to the seriousness of the issues we face in assessing student work.

Reference

[1] Liang, W., Yuksekgonul, M., Mao, Y., Wu, E., & Zou, J. (2023). GPT detectors are biased against non-native English writers. arXiv preprint arXiv:2304.02819.
