GPT-4 Is The Perfect Student: Will Education As We Know It, Come Crumbling Down?


Please note that this blog relates to a paper which has not been peer reviewed [1]. A review of the paper is here.

And so, we all know that the days of machines generating poorly constructed text are gone. With ChatGPT, we get machine-written text that is almost undetectable. We also know that it is good at creating computer code. But surely maths and engineering are problem areas, where the machine will struggle to interpret the problems?

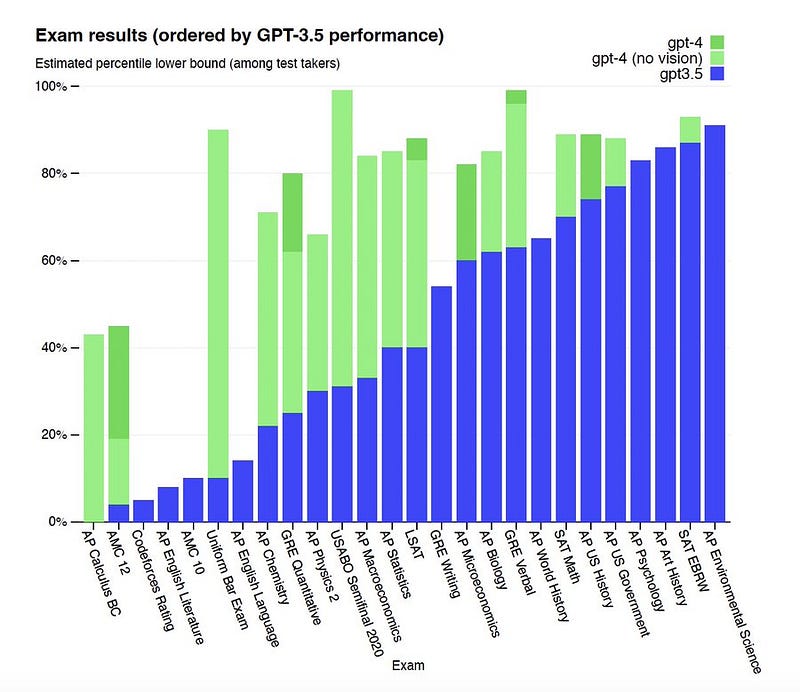

Recent research has shown that GPT-4 significantly improves on earlier models in areas such as image detection and maths [here]:

Well, new research shows that GPT-4 is now able to correctly answer all of the undergraduate-level maths and engineering problems in a test set [here]:

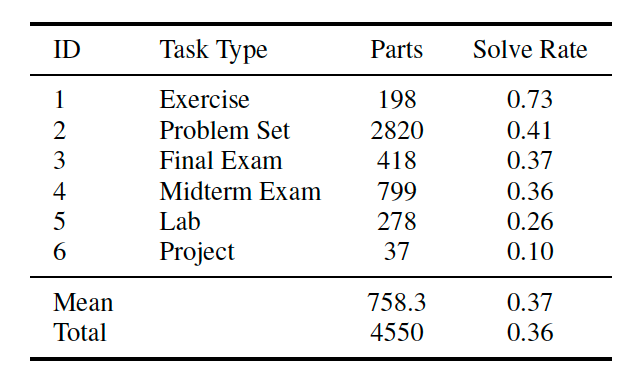

For this, the research team took 4,550 questions and solutions from the MIT Mathematics and Electrical Engineering and Computer Science (EECS) curriculum, and found that GPT-3.5 solved around one-third of the questions, while GPT-4, with prompt engineering, achieved a perfect score on a test set that excluded image-based questions. Overall, their research focuses on how GPT-4 could enhance Mathematics and EECS education.

The assessment papers were defined in terms of level (0–3), the number of questions, and the number of parts:

With GPT-3.5, the solve rate varied depending on whether the question came from an exercise (which was not assessed), a final exam, lab work, or project work. Generally, exercises were easier to solve, while lab and project work were more difficult:

In general, too, questions requiring a programming solution had the highest success rate, while questions posed as images had the lowest:

These results can be illustrated as:

With GPT-3.5, the success rate varied from 93% correct (Introduction to CS Programming with Python) to 6% (Signal Processing):

In Column A we see the number of questions in each assessment, and in B we see the solve rate:

It can be seen that differential equations, more advanced electrical circuits, signal processing and applied maths had relatively low success rates, while introductory subjects tended to have high success rates.
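The solve rates quoted above, whether per question type or per subject, are simply the fraction of questions in each group that were graded as correct. The minimal Python sketch below shows that tally. The records and category labels (`programming`, `open`, `image`) are made up for illustration; they are not the paper's data or grading code.

```python
from collections import defaultdict

# Hypothetical graded records: (category, solved?). Toy values for
# illustration only, NOT results from the paper.
graded = [
    ("programming", True),
    ("programming", True),
    ("programming", False),
    ("open", True),
    ("open", False),
    ("image", False),
    ("image", False),
    ("image", True),
    ("image", False),
]


def solve_rates(records):
    """Return {category: fraction of that category's questions marked solved}."""
    totals = defaultdict(int)
    solved = defaultdict(int)
    for category, ok in records:
        totals[category] += 1
        if ok:
            solved[category] += 1
    return {c: solved[c] / totals[c] for c in totals}


rates = solve_rates(graded)
# Print categories from highest to lowest solve rate.
for category, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{category:12s} {rate:.0%}")
```

With these toy records, the programming category comes out highest (67%) and the image category lowest (25%), mirroring the shape, though not the actual numbers, of the breakdown described above.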

The research team then looked at GPT-4, and found that its ability to solve abstract maths problems allowed it to achieve a near-perfect score in every subject:

Conclusions

We can’t stop the rise of AI in education, but it is obvious that we now need to fundamentally change our approaches to assessment; otherwise, we will get perfect answers every time. If we do not understand the strengths of these tools, we could create a generation of graduates who know little of the core fundamentals and can basically just enter GPT prompts. This leads to a dehumanised world, where our children automatically turn to the machine for answers.

Reference

[1] Sarah J. Zhang, Samuel Florin, Ariel N. Lee, Eamon Niknafs, Andrei Marginean, Annie Wang, Keith Tyser, Zad Chin, Yann Hicke, Nikhil Singh, Madeleine Udell, Yoon Kim, Tonio Buonassisi, Armando Solar-Lezama, Iddo Drori, Exploring the MIT Mathematics and EECS Curriculum Using Large Language Models. https://arxiv.org/abs/2306.08997