Web Security: 16 million web sites are wide open to compromise?

Like it or not, the world is moving towards the Web for its applications, data and processing. But is it a secure world? On simple estimates I think more than 16 million web sites could be wide open to FREAK, DROWN and many other threats. These sites are often unpatched, and badly configured, and can leak one of the most precious of all things on the Internet: an organisation’s private key.

The cost of losing that key for a large organisation is estimated to be greater than $100 million in damages. So protecting your private key, and patching, is a good thing. Along with this, there are fines under GDPR and NIS. Ask many cyber security professionals what would keep them awake at night, and the loss of private keys would be right up there.

So you can shout things from the rooftops, but whether anyone hears you depends on whether they are listening. An organisation that does not even get the basics of security right really needs to have a look at itself, and understand how seriously it takes its risks, and that really ends up at the CEO’s door. From a simple scan of public sector sites that I undertook, the state of security is not good, and here is an example of a public sector site that is currently running:

Why should my CEO be worried?

If your CEO tells you that SSL and TLS are just for geeks, tell them that a breach of a trust infrastructure (the compromising of private keys) is the No. 1 most significant security threat for many cyber security professionals, and is one of the most expensive (and embarrassing) things to be faced with. A single lost key can enable fake sites, properly signed emails, spying on network traffic, and even fake software updates, along with possible access to customer data … and much more. And the worst thing is that you are unlikely to ever know the full scope of a breach.

Venafi recently surveyed 500 CIOs and found that one of the greatest holes in security in their organisations is related to encryption keys and digital certificates. Their report outlines that around half of network attacks come in through SSL/TLS, and this figure is only likely to increase. Along with this, almost 90% thought that their company was defenseless against tunneled attacks, as they cannot inspect the traffic, and around the same number said that they had suffered from an attack which used encryption to hide itself.

They found also that 86% of CIOs think that stealing encryption keys and digital certificates is a significant threat to their organisation, and 79% feel that innovation is being held back because of the trust issues with encryption keys.

Ponemon, too, undertook some research into failures in control and trust, and surveyed over 2,400 companies from the Global 2000 in Australia, France, Germany, the UK and the US, and found that attacks on trust could lead to every organisation losing up to $400 million. With this they identified that one of the major weaknesses in enterprise security is often the lack of management over encryption keys and certificates.

So let’s dip into SSL Labs stats, and analyse the state of the Internet. The core of the problem is often four things: support for SSL (Secure Socket Layer) v2; legacy “export” cipher suites (caused by the original US export restrictions on the early versions of SSL); OpenSSL bugs; and Bleichenbacher’s attack. I have provided an outline of some of the methods after the main analysis sections.

An A grade or F?

Sites gain a grading based on their vulnerabilities and the cryptography they support. The majority get an “A” grade (with some even getting an “A+” grade), which means they are well patched and have good support for security. A “B” grade is a “must do better”, and normally the site has just a few vulnerabilities. A “C” grade means there are quite a few things which are insecure: a “not quite good enough, you must try harder” grade. An “F” grade (9.8%), though, is terrible: a “you need to take the whole course again” grade. Such a site carries so much risk that it should probably be shut off immediately (and patched) and the administrators sent on a Web security/crypto course. Remember that supporting HTTPS usually means that the site is being maintained in some way.

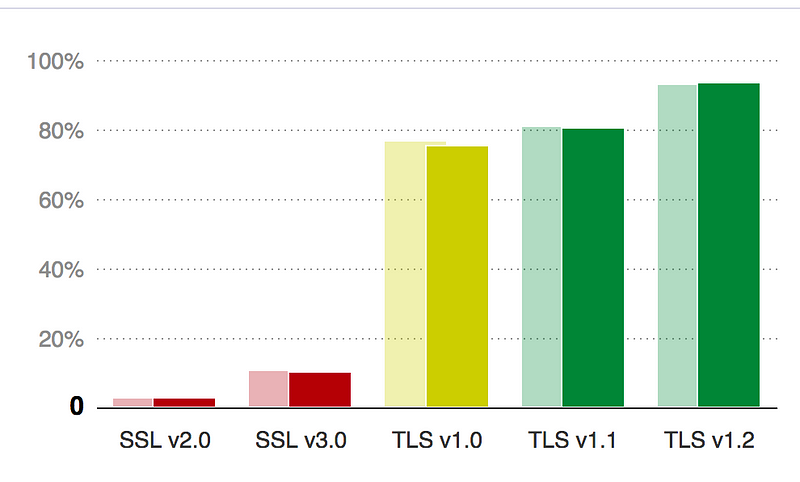

Surely no-one supports SSL v2?

For SSL/TLS support, organisations must NEVER EVER support SSL 2.0, but still 2.6% of sites have it enabled. Around 10% of the sites surveyed, too, still support SSL v3, which also opens up many security weaknesses.

This means that around 1-in-40 sites still support SSL v2, and are wide open for attack. As an estimate, in 2014 there were around 644 million web sites online in the world, and this would mean that around 16 million were wide open to FREAK, DROWN and many other threats. The number of Web sites in the world is now estimated to be well over 1 billion (and increases almost exponentially each year), so this estimate is probably on the low side.
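The arithmetic behind the headline figure can be checked in a few lines. This is only a back-of-the-envelope sketch using the two numbers quoted above, and it assumes (as the estimate itself does) that the 2.6% share seen in the survey applies to every web site online. The variable names are mine.

```python
# Back-of-the-envelope estimate using the figures quoted in the text.
total_sites_2014 = 644_000_000   # estimated web sites online in 2014
sslv2_share = 0.026              # 2.6% of surveyed sites still enable SSL v2

# Assumption: the surveyed share holds across all web sites.
exposed_sites = total_sites_2014 * sslv2_share
one_in_n = 1 / sslv2_share

print(f"Sites with SSL v2 enabled: about {exposed_sites / 1e6:.1f} million")
print(f"That is roughly 1 in {one_in_n:.0f} sites")
```

The product comes to about 16.7 million, which is where the "16 million" in the title comes from.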

To protect against a range of vulnerabilities, sites should support TLS 1.0 and above (and migrate away from TLS 1.0 support).

The loss of SSL v2 might limit someone with an original, unpatched version of Netscape or Internet Explorer on an unpatched Windows XP machine. But, basically, someone with this setup should not be connecting to any network. Few browsers and applications, too, need SSL v3, and if they do, they should be upgraded to use TLS.

Stopping a long term breach — Forward secrecy (FS)

An important concept within key exchange is the use of forward secrecy (FS), which means that a compromise of the long-term keys will not compromise any previous session keys. For example, if we send the public key of the server to the client, and the client then sends back a session key for the connection which is encrypted with the public key of the server, the server will decrypt this with its private key and determine the session key.

A leakage of the private key of the server would cause all the sessions which used the matching public key to be compromised. FS thus aims to overcome this by making sure that the session keys cannot be compromised, even if the long-term key is compromised. The problem with using the RSA method to pass keys is that a breach of the long-term keys would breach the keys previously used for secure communications.

For FS we see that around half of the sites support it, while the other half support other methods. While the trend is good, there is still room for improvement, and the upgrade to TLS 1.3 should improve this figure. Overall a breach of the private key of a site could cause massive problems for all the sessions which were protected by it, so sites (and browsers) need to move towards using FS. A disappointing 4.5% of Web sites scanned did not support FS in any way, and this is a figure which really needs to move toward zero.

Sites need to support TLS 1.2, at least, and also support TLS 1.3 in order to improve forward secrecy, and limit the scope of a breach of the private key.
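As one illustration of that advice, here is a minimal sketch of a server-side configuration using Python's standard `ssl` module. It is not taken from any of the scanned sites: the cipher string is my own choice of an OpenSSL-style selector for ephemeral ECDHE suites, and certificate loading is left out.

```python
import ssl

# Server-side context; loading the certificate and key is omitted in this sketch.
context = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)

# Refuse SSL v3, TLS 1.0 and TLS 1.1 outright.
context.minimum_version = ssl.TLSVersion.TLSv1_2

# For TLS 1.2, offer only suites with an ephemeral ECDHE key exchange,
# which is what provides forward secrecy. (Assumed cipher string.)
context.set_ciphers("ECDHE+AESGCM:ECDHE+CHACHA20")

# List what the context will now offer.
for suite in context.get_ciphers():
    print(suite["protocol"], suite["name"])
```

The TLS 1.3 suites in the listing are not controlled by `set_ciphers()`; they always use an ephemeral key exchange.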

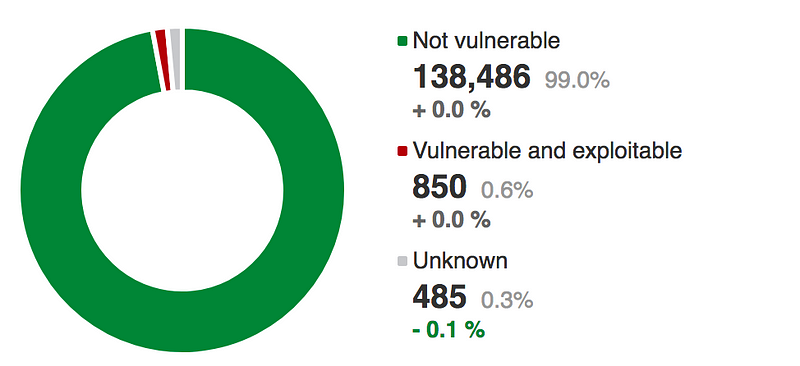

Stopping Bleichenbacher’s Attack by a ROBOT

In 2017, the ROBOT attack was announced. With this we saw Bleichenbacher’s attack [here] return, which exposed many Web sites to attackers. Luckily the Internet now seems fairly free of this vulnerability, with only around 2% being vulnerable. A figure of 0.6% having a weak oracle means that, over the whole of the Internet, there could still be hundreds of thousands of servers at risk of ROBOT.

Avoid Being Savaged by a Poodle

The POODLE (Padding Oracle On Downgraded Legacy Encryption) vulnerability relates to how Web servers deal with older versions of the SSL protocol. Again, it now seems to be fairly well patched on servers, with only 1% being vulnerable. A figure of 0.6% for truly vulnerable sites could lead to hundreds of thousands of servers being at risk, where the chance of finding one at random is just 1 in 167.

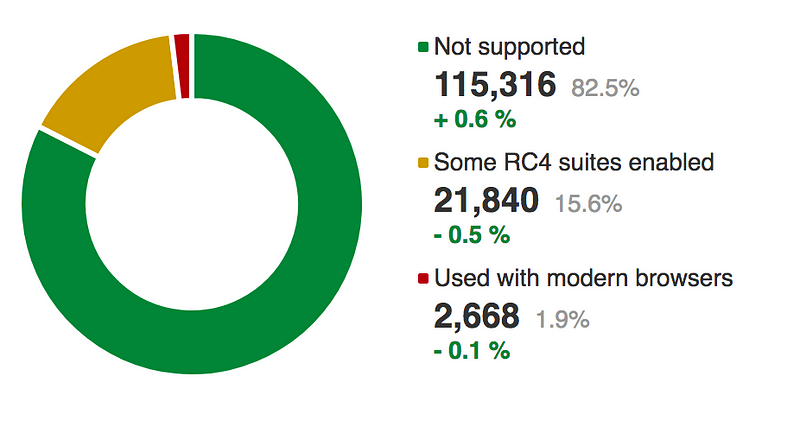

The old stream crypto — RC4

In the days before powerful computers, many computers struggled to have enough processing power and memory for cryptography, so stream ciphers were often used to ease this.

One of the most popular was RC4 (“Ron’s Cipher 4”, after Ron Rivest), as it was relatively fast compared with block ciphers, and could run on computers with limited capacity. Unfortunately RC4 is flawed, and can be cracked. From the scan, we can see that it is still supported in around 17.5% of the sites, and could thus open up a crack on the secure communications.

Few browsers now need RC4, and most go for 128-bit or 256-bit AES as a standard for the tunnel. If you need a stream cipher, then Google is pushing ChaCha as a strong stream cipher, which is fast and (up to now) secure.

The end of SHA-1?

The good news is that the industry has finally migrated away from SHA-1 as the method of signing digital certificates, as Google showed that it was practically possible to fake a SHA-1 signature. The survey shows that no site had a SHA-1 signature, and that the vast majority (99.8%) were using SHA-256, where it would take billions and billions of years to change a certificate and end up with the same hash signature.
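To get a feel for the difference, the sketch below hashes two nearly identical strings with SHA-1 and SHA-256 using Python's `hashlib`. The certificate-like strings are invented for illustration, and this is not how a certificate is actually encoded or signed. It only shows the digest sizes and that a one-character change gives a different digest.

```python
import hashlib

# Made-up stand-ins for certificate contents (illustrative only).
original = b"CN=example.org, O=Example Ltd"
tampered = b"CN=example.org, O=Examp1e Ltd"

for name, func in (("sha1", hashlib.sha1), ("sha256", hashlib.sha256)):
    h1 = func(original)
    h2 = func(tampered)
    bits = h1.digest_size * 8
    # A generic collision search costs roughly 2**(bits/2) hash operations.
    print(f"{name}: {bits}-bit digest, "
          f"generic collision effort ~2**{bits // 2}, "
          f"digests differ: {h1.digest() != h2.digest()}")
```

The generic collision bound for SHA-1 is around 2**80, and the practical attack worked because flaws in SHA-1 bring the cost well below that. No comparable shortcut is publicly known for SHA-256.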

Are we still DROWN’ing?

DROWN (Decrypting RSA using Obsolete and Weakened eNcryption) focuses on Web servers running SSLv2, which shouldn’t be used these days, but the researchers found a large percentage of servers still supporting it. For this they created an attack which doesn’t actually involve an SSLv2 connection, but relates to the legacy “export” cipher suites. In the survey, around 1.5% of sites are still open to the DROWN vulnerability, and thus to a breach of a site’s private keys.

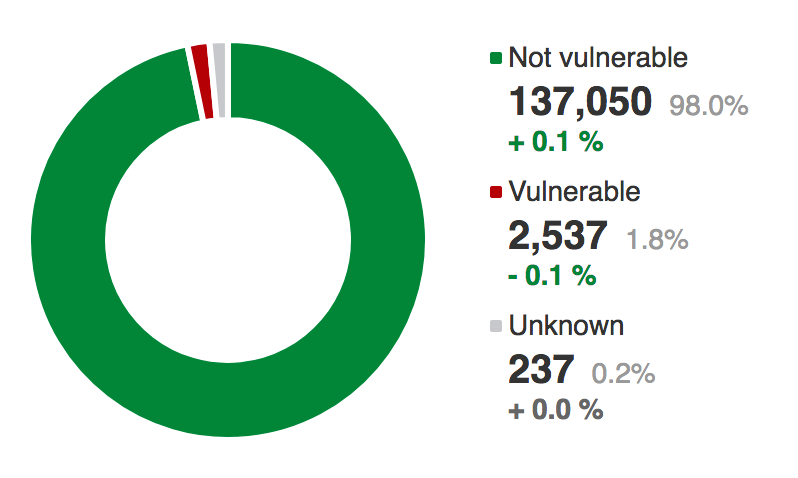

So what is CVE-2016-2107?

Every significant vulnerability gets a CVE number. Sometimes they are associated with a common name such as Heartbleed (CVE-2014-0160), BEAST (CVE-2011-3389), FREAK (CVE-2015-0204), and so on, but CVE-2016-2107 just doesn’t have a common name. Overall it focuses on a bug in OpenSSL 1.0.1 and 1.0.2, where an attacker can perform a MITM (Man-in-the-Middle) attack on the AES encryption. In this case it can be seen that 1.8% of the sites scanned were vulnerable to this. OpenSSL is commonly used by Linux-based servers to implement the SSL/TLS connection, and bugs are common. Systems should thus always be patched when a new one is found. Heartbleed was by far the worst that occurred due to an OpenSSL bug.

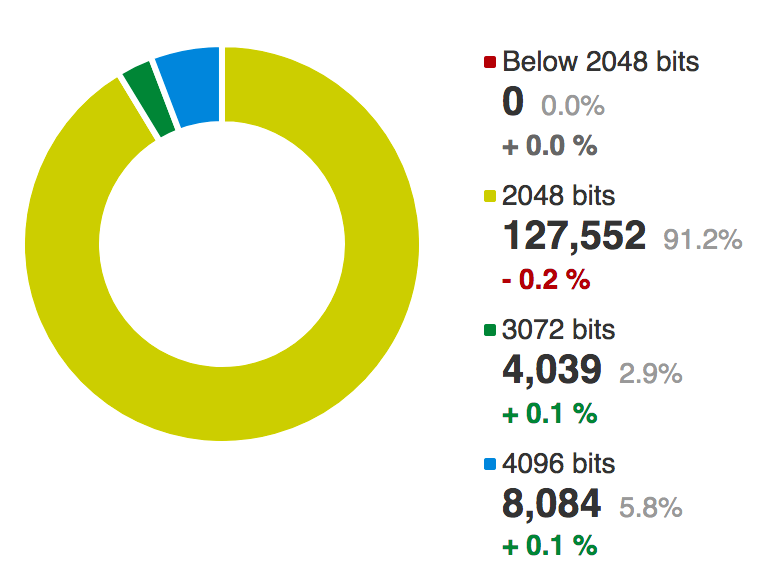

And the public keys?

With RSA we have a public key and a private key. We release the public key as an e value (normally 65,537) and an N (the modulus) value. The N value gives RSA its strength, and is made up of two prime numbers (p and q) multiplied together. An intruder can thus search for the factors of N, and if they find them they will be able to discover the private key (d,N). The larger the prime numbers, the more costly it will be to find the factors.
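A toy example makes the link between factoring N and recovering the private key concrete. The primes, exponent and message below are illustrative values I have picked, and they are absurdly small: the trial-division `factor` helper only works because N is tiny, which is exactly the point.

```python
from math import isqrt

def factor(n):
    """Find p and q by trial division. Only feasible because n is tiny."""
    for candidate in range(2, isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no non-trivial factors")

# Toy key pair (illustrative values, far too small for real use).
p, q = 61, 53
N = p * q                  # public modulus
e = 17                     # public exponent
phi = (p - 1) * (q - 1)
d = pow(e, -1, phi)        # private exponent (needs Python 3.8+)

message = 65
cipher = pow(message, e, N)

# The intruder sees only (e, N) and the ciphertext, then factors N.
p2, q2 = factor(N)
d_recovered = pow(e, -1, (p2 - 1) * (q2 - 1))
print(pow(cipher, d_recovered, N))   # prints the original message, 65
```

With a 2,048-bit modulus the same search is far beyond any known factoring capability, which is why the size of the primes matters.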

It is well known that RSA keys of 1,024 bits or less are weak against attack, and a well-known attack on Adobe updates breaks Adobe’s signing using a cracked 512-bit RSA private key, which matches a valid 512-bit public key for Adobe. The good news is that every site surveyed supported at least 2,048 bits, and there are none which have weak keys on their digital certificate.

Normally RSA is used in the key exchange method, and thus someone cracking the key will be able to discover the session key used, as the session key is sent back from the client to the server using the public key of the server to protect it. The server then uses its private key to discover the key that the client wants to use. TLS 1.3 gets rid of this method, and moves towards a proper key exchange method (such as ECDH).

What is surprising about this is that some companies are so worried about the threat to their trust infrastructure that they have now moved to whopping 4,096-bit RSA keys (5.8%).

And for the key exchange?

When a client connects to a server, it can either use RSA to pass a secret key, or use key exchange. A common method uses the Diffie-Hellman key exchange technique. A shocking finding is that some sites are still using Diffie-Hellman key exchange methods which have keys that are less than 1,024 bits. The industry knows that this is a significant challenge, and 512-bit and 768-bit Diffie-Hellman keys should NEVER be used. Unfortunately around 5% had key exchanges of less than 1,024 bits and another 13% had weak keys of 1,024 bits.
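The sketch below shows the Diffie-Hellman exchange on a deliberately tiny group, and then why the size of the prime matters. The parameters (a 17-bit prime and generator 3) are my own illustrative choices, and `brute_force_log` is a hypothetical helper that simply tries every exponent, which is only possible because the group is so small.

```python
import secrets

# Toy public parameters (assumed for illustration, hopelessly small).
p = 65537
g = 3

# Each side picks a private value and publishes g^x mod p.
a = secrets.randbelow(p - 2) + 1      # client secret
b = secrets.randbelow(p - 2) + 1      # server secret
A = pow(g, a, p)                      # sent client -> server
B = pow(g, b, p)                      # sent server -> client

# Both sides derive the same shared secret without ever sending it.
shared_client = pow(B, a, p)
shared_server = pow(A, b, p)

def brute_force_log(target, g, p):
    """Try every exponent until g^x mod p equals the target."""
    value = 1
    for x in range(p):
        if value == target:
            return x
        value = (value * g) % p
    raise ValueError("no exponent found")

# An eavesdropper who saw only A and B recovers the shared secret.
x = brute_force_log(A, g, p)
print(pow(B, x, p) == shared_client)
```

Real attacks on 512-bit groups use far better algorithms than exhaustive search, but the principle is the same: once the discrete log of one public value is found, the shared secret falls out.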

Weak crypto — surely not?

For weak crypto there are quite a few cryptography methods that are on the naughty step. These include MD5 (for the ease of producing different data with the same hash signature), RC4 (for known weaknesses) and DES (for small key sizes). A level of 3.5% is disappointing, as there is no need to support them, with great methods such as AES. For MD5, a researcher found that they could take three photographs and stuff them with data (but still make them viewable), and end up producing the same hash signature. That’s how bad MD5 is!

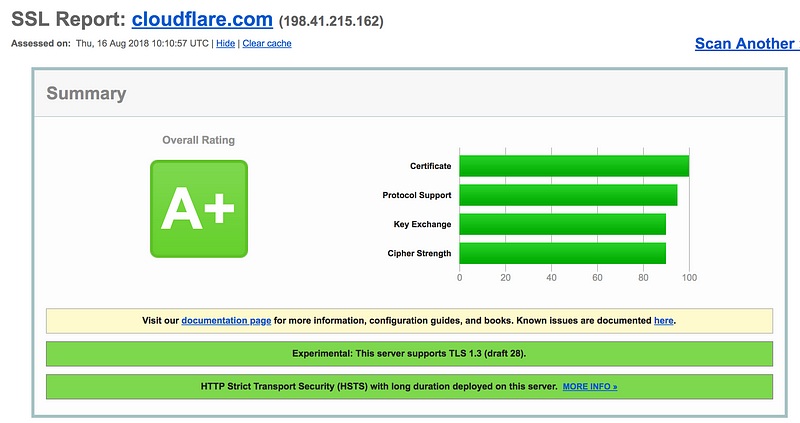

What does good look like?

Well, good is typically defined by those who take security seriously, such as Cloudflare [here]:

Others

Heartbleed has generally been fixed with only 64 sites reporting the bug. Also BEAST now seems to be at a negligible rate.

Conclusions

CEOs need to demand reports on a weekly basis, and if there’s a weakness on a company’s Web site, they should be held responsible, as they didn’t ask the right questions of their technical team. A failure to migrate away from SSL v2 is an embarrassment for any organisation, as their team obviously do not understand the threats they face. If so, the CEO and the administration team should be sent for training on a Web security course.

Governments and the public sector, too, cannot just say “We need to still support XP”, as the breach of a private key will cause a large scale lack of trust, along with a leak of citizen data.

Of the scans I did, far too many public sector sites gained an F grade, and many gained a T grade, which meant that Google marked them as insecure.

Google has been shouting from the rooftops for over a year, but many are still not listening. This isn’t a geeky thing, the threat is the most serious of all Internet threats: a breach of our trust infrastructure. It is serious enough to bring down a company, as it could damage the trust in their whole Web infrastructure.

A scan takes two minutes, and can be automated with Python. If your company does not trust the SSL Labs tools, in the UK, the NCSC has a whole lot of tools you can use. If you are a CEO, go scan your site now, and if it gains a T or an F, go ask some questions.
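As a rough idea of what such automation could look like, here is a minimal sketch using only Python's standard library. It is not the SSL Labs scan and gives no grade: it reports only the protocol and cipher suite that this one client negotiates with a server. The `scan` and `is_legacy` names are mine, and the host in the commented-out call is a placeholder.

```python
import socket
import ssl

# Protocol names as reported by SSLSocket.version().
LEGACY = {"SSLv2", "SSLv3", "TLSv1", "TLSv1.1"}

def is_legacy(protocol):
    """True if the negotiated protocol is one that should be retired."""
    return protocol in LEGACY

def scan(host, port=443, timeout=5.0):
    """Connect once and report what was negotiated with this client."""
    context = ssl.create_default_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            cipher_name, _, secret_bits = tls.cipher()
            protocol = tls.version()
            return {
                "host": host,
                "protocol": protocol,
                "cipher": cipher_name,
                "bits": secret_bits,
                "legacy": is_legacy(protocol),
            }

# Example call (needs network access):
# print(scan("example.org"))
```

A modern Python build will usually refuse to negotiate SSL v2 or v3 at all, so a sketch like this shows the best a server offers rather than the worst it still accepts. Finding the worst needs a purpose-built scanner.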

And this scan is only for those who support HTTPS; think of all the problems faced by those who don’t even have SSL/TLS enabled. For them, Google Chrome is going to kick them off the Internet, and mark them as insecure.

Background

Netscape’s Legacy

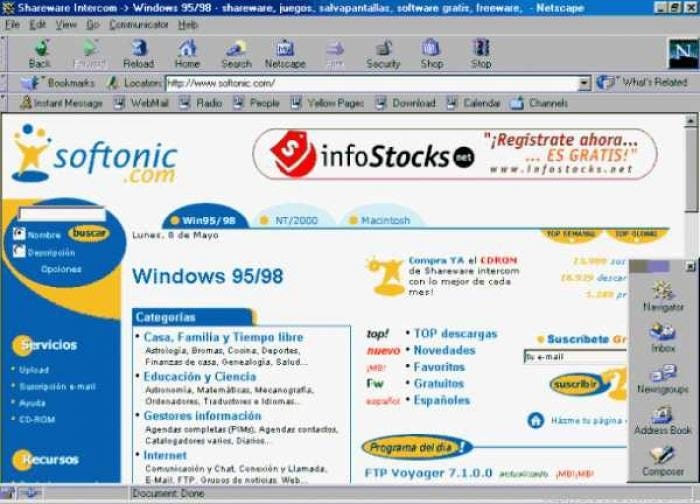

Netscape’s main product was Netscape Navigator, and it lived as a Web browser from Version 1.0 (released in 1994) to Version 4.0 (1997). It was originally known as Mosaic but they had to drop the name due to legal action from the National Center for Supercomputing Applications (who created NCSA Mosaic). It continually innovated, with integrated mail in Version 2 (Netscape Mail), and then with a WYSIWYG editor in Version 3.0. With Netscape holding a large share of the market, Microsoft spotted an opportunity, though, and started to erode Netscape’s market share with a free version of Internet Explorer. The last main version of the browser was Netscape 4 (Netscape Communicator):

The application of encryption to network communications traces its roots back to Netscape. They used a handshaking method and a digital certificate to create a shared key which was used by either side of the communication, and which also provided the identity of the server to the client. With this, a server sends a digital certificate to the client, which contains its public key. If this certificate has been validated by a trusted issuer, the client can trust the identity of the server.

Netscape first defined SSL (Secure Socket Layer) Version 1.0 in 1993, and eventually, in 1996, released a standard which is still widely used: SSL 3.0. While many in the industry used it, it did not become an RFC standard until 2011 (when it was assigned RFC 6101 and defined as a historic standard).

Along with classics like IP (RFC-791), TCP (RFC-793), HTTP (RFC-1945) [here], it is an out-of-the-box classic:

There are several different versions of SSL, from 1.0 to 3.0, and with 3.1 it changed its name to TLS (Transport Layer Security), so that SSL 3.1 is also known as TLS 1.0. Most systems now use TLS 1.1 or 1.2, which are free of the flaws which compromised previous versions.

FREAK and Logjam

You need to watch your HTTPS connections, as your server can include 512-bit RSA keys (FREAK) or also use weak Diffie-Hellman keys (Logjam). A client can thus ask for 512-bit RSA encryption keys to be used, and the server may accept that, or the client might only ask for a 512-bit Diffie-Hellman key, and for this to be accepted by the server.

Unfortunately, with Logjam, it has been identified that 512-bit Diffie-Hellman key exchange can be easily cracked, and 1,024 bits can be cracked by nation states — and so a large nation with lots of computation resources — such as the USA and China — can read the encrypted traffic in the tunnel.

But you’ve got to watch not to lock out older browsers, so there’s a list of cipher suites that you can use, where the client tells the server which cipher suites it can support and the server picks one of these. In each suite, there’s a key exchange method, a tunnel encryption method, and a hash signature method.

So to test my implementation we run the famous program which caused Heartbleed (openssl.exe) in debug mode:

$ openssl s_client -connect asecuritysite.com:443

CONNECTED(00000003)

depth=2 C = US, ST = Arizona, L = Scottsdale, O = "GoDaddy.com, Inc.", CN = Go Daddy Root Certificate Authority - G2

verify error:num=20:unable to get local issuer certificate

verify return:0

---

Certificate chain

0 s:/OU=Domain Control Validated/CN=asecuritysite.com

i:/C=US/ST=Arizona/L=Scottsdale/O=GoDaddy.com, Inc./OU=http://certs.godaddy.com/repository//CN=Go Daddy Secure Certificate Authority - G2

1 s:/C=US/ST=Arizona/L=Scottsdale/O=GoDaddy.com, Inc./OU=http://certs.godaddy.com/repository//CN=Go Daddy Secure Certificate Authority - G2

i:/C=US/ST=Arizona/L=Scottsdale/O=GoDaddy.com, Inc./CN=Go Daddy Root Certificate Authority - G2

2 s:/C=US/ST=Arizona/L=Scottsdale/O=GoDaddy.com, Inc./CN=Go Daddy Root Certificate Authority - G2

i:/C=US/O=The Go Daddy Group, Inc./OU=Go Daddy Class 2 Certification Authority

---

Server certificate

-----BEGIN CERTIFICATE-----

MIIFNTCCBB2gAwIBAgIJAI9autTJeHPLMA0GCSqGSIb3DQEBCwUAMIG0MQswCQYD

...

xp58jy7OglYrxOY6vGVUkAXFd9Umaoe5A+ZFCwUNkSM57floB3m1lDs=

-----END CERTIFICATE-----

subject=/OU=Domain Control Validated/CN=asecuritysite.com

issuer=/C=US/ST=Arizona/L=Scottsdale/O=GoDaddy.com, Inc./OU=http://certs.godaddy.com/repository//CN=Go Daddy Secure Certificate Authority - G2

---

No client certificate CA names sent

---

SSL handshake has read 4340 bytes and written 570 bytes

---

New, TLSv1/SSLv3, Cipher is ECDHE-RSA-AES256-SHA384

Server public key is 2048 bit

Secure Renegotiation IS supported

Compression: NONE

Expansion: NONE

SSL-Session:

Protocol : TLSv1.2

Cipher : ECDHE-RSA-AES256-SHA384

Session-ID: 2C300000B0519D0B4DBD3EBC3FB32513F15D0270BFB4D6201707494471C60391

Session-ID-ctx:

Master-Key: EBC5DCFD0BEE85B40212E71EAA893F57E6A33DF328D67ADE515759D1EB006E6C15FAE75C04CB32AFDAB6E425D3FE43E0

So that’s ECDHE — Elliptic curve Diffie-Hellman with RSA for the key exchange, 256-bit AES is used for the encryption — no space alien will break that one — and SHA-384 for the hashing method. Nice … no space alien with a thousand quantum computers is going to crack that anytime soon. Test here.
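The way the suite name breaks down can be sketched in code. The `parse_suite` helper below is my own simplification and assumes OpenSSL-style TLS 1.2 names of the form key exchange, authentication, encryption, hash. It does not handle TLS 1.3 names or every legacy naming quirk.

```python
# Key exchange prefixes this sketch recognises (an assumed, partial list).
KEY_EXCHANGES = {"ECDHE", "DHE", "ECDH", "DH"}

def parse_suite(name):
    """Split an OpenSSL-style TLS 1.2 suite name into its parts."""
    parts = name.split("-")
    if parts[0] in KEY_EXCHANGES:
        key_exchange, auth, rest = parts[0], parts[1], parts[2:]
    else:
        # Names with no prefix (e.g. AES256-SHA) use RSA for both roles.
        key_exchange, auth, rest = "RSA", "RSA", parts
    return {
        "key_exchange": key_exchange,
        "authentication": auth,
        "encryption": "-".join(rest[:-1]),
        "hash": rest[-1],
        # Only the ephemeral variants give forward secrecy.
        "forward_secrecy": key_exchange in {"ECDHE", "DHE"},
    }

print(parse_suite("ECDHE-RSA-AES256-SHA384"))
print(parse_suite("AES256-SHA"))
```

For the suite negotiated above, this gives ECDHE for the key exchange, RSA for authentication, AES256 for the encryption and SHA384 for the hash, with forward secrecy flagged as present.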

But there’s something lying underneath, as the handshake process with secure tunnels allows a range of cipher suites to be used, so someone could come along and not pick the best one. The best way to see if your system is really doing what you have told it is to run Wireshark [View Wireshark file here]. If you have Wireshark, then go to Packet 805, and you’ll see a Client Hello message:

Next select the cipher suite, and you’ll see there are 16 possible suites, and that we are using TLS 1.2. In there you will see ECDHE as the main key exchange method. But there’s a bad one in there … one of the cipher suites on the naughty step is 0x0039 as it uses Diffie-Hellman key exchange. The server is going to like that one!

To find out what the server has selected … we then see in Packet 859 that it has gone for ECDHE:

DROWN

DROWN (Decrypting RSA using Obsolete and Weakened eNcryption) [paper] focuses on Web servers running SSLv2, which shouldn’t be used these days, but the researchers found a large percentage of servers still supporting it. For this they created an attack which doesn’t actually involve an SSLv2 connection, but relates to the legacy “export” ciphersuites.

We normally talk about Transport Layer Security (TLS). The progress of secure sockets has generally gone through SSL v2, SSL v3, TLS 1.0 (SSL 3.1), and now onto TLS 1.1 and TLS 1.2. The first version of SSL (v1) was developed by Netscape, and was a bit of a disaster, so the first real version was SSL v2. While SSL v1 was bad, SSL v2 wasn’t particularly good either. One of its greatest flaws was the “export-grade ciphersuites”, which were included in order to comply with US export regulations, and which made sure that the keys were crackable. This included an incredibly small key size, such as a 40-bit session key for the connection.

So why does SSL v2 still exist? Well all the modern browsers have aged it out, and don’t support it, but many companies still support it on their Web sites. If you’re worried about your own site you can test here:

And then there’s the open source software that you love to hate: OpenSSL.

It was supposed to disable SSLv2 ciphersuites, but it hasn’t, which leaves a massive hole on the Internet. The researchers even found that it was still possible to use DROWN on OpenSSL servers which had the SSLv2 option disabled (another own goal from OpenSSL). The patch for this was released “quietly” in January 2016, but many servers do not have the patch installed.

This is a worry for many companies, as they will typically be using the same digital certificate for TLS 1.2, as they have for SSL 2.0, which will mean that the private key can be cracked for the whole site. It is thus a “cross-protocol attack”, where a compromise in one protocol affects others.

The main attack against SSLv2 and TLS is related to the RSA encryption padding defined as PKCS#1 v1.5. Daniel Bleichenbacher defined an attack [1] on the encryption scheme that allows an intruder to decrypt an RSA ciphertext. The intruder has the server decrypt many related ciphertexts, the server returns whether each decryption was successful or not, and with this information the intruder can crack the stream. With an SSL connection, the client encrypts a pre-master secret (PMS) with the server’s public key, and the server decrypts it with its private key.

The method used to overcome this problem is for the server not to return an error, but to pretend that everything is okay and return an incorrect pre-master secret, so that the intruder cannot use the returned values to crack the session key. The solution was TLS 1.2 [here].

If the intruder, though, sends two correctly defined ciphertexts, and receives back the same PMS, they know that the ciphertext is correct, but if they receive two different ones, they can determine that it was incorrect. This then allows for a resurrection of the Bleichenbacher attack.

In TLS, the specification was changed so that the PMS is scrambled, so that it changes each time, but this is not the case for SSLv2, which has a weaker shield against Bleichenbacher attacks. Its weakness is that, in export modes, the master key is only 40 bits long, and can thus be cracked by brute force. The researchers, in this case, used GPUs in the Amazon Cloud to crack them.
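To see why 40 bits falls to brute force, it helps to count the keys. The guess rate below is purely an assumption for illustration (it is not the rate the researchers achieved on their GPUs), and the time shown is the worst case for trying every key.

```python
export_bits = 40
modern_bits = 128

# Assumed rate, for illustration only: one billion guesses per second.
guesses_per_second = 1_000_000_000

export_keys = 2 ** export_bits
seconds = export_keys / guesses_per_second

print(f"40-bit keyspace: {export_keys:,} keys")
print(f"Worst case at the assumed rate: {seconds / 60:.0f} minutes")
print(f"A 128-bit keyspace is 2**{modern_bits - export_bits} times larger")
```

At that assumed rate a 40-bit search finishes in under 20 minutes, while every extra bit doubles the work, so a 128-bit key is out of reach of the same approach.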

A crack on SSLv2 will often crack the TLS versions too, as the same RSA private key is used for both. It has been calculated that it will work in one in a thousand TLS connections, so there are 1,000 TLS handshakes, to find one RSA ciphertext. It then requires around 40,000 queries to a server, and about 2 to the power of 50 offline operations (costing $440 on Amazon EC2 Cloud).

This is why we see a log of:

While this method of DROWN costs $440 to crack the private key, some researchers are already proposing that it may be possible to get it down to just a single minute of CPU time (on a single core). This uses a bug in the way that OpenSSL handles the SSLv2 key exchange (which was fixed in March 2015). The nightmare scenario for this is that large parts of the Internet would be exposed, and where private keys could be cracked within minutes (extended DROWN).

The researchers in the paper found more than 3,500,000 Web servers which were vulnerable to DROWN, and over 2 million to the extended DROWN method.

POODLE

Few things can be kept secret within computer security, and so it has been with a flaw in SSLv3, where there were rumors of a forthcoming announcement. So it happened on Tuesday 14 October 2014 that Bodo Möller (along with Thai Duong and Krzysztof Kotowicz) from Google announced a vulnerability in SSLv3, where the plaintext of the encrypted content could be revealed by an intruder. The flaw itself had been speculated on for a while, and this announcement showed that it can actually be used to compromise secure communications.

The vulnerability was named the POODLE (Padding Oracle On Downgraded Legacy Encryption) attack, and it relates to how Web servers deal with older versions of the SSL (Secure Socket Layer) protocol.
